More about the template:

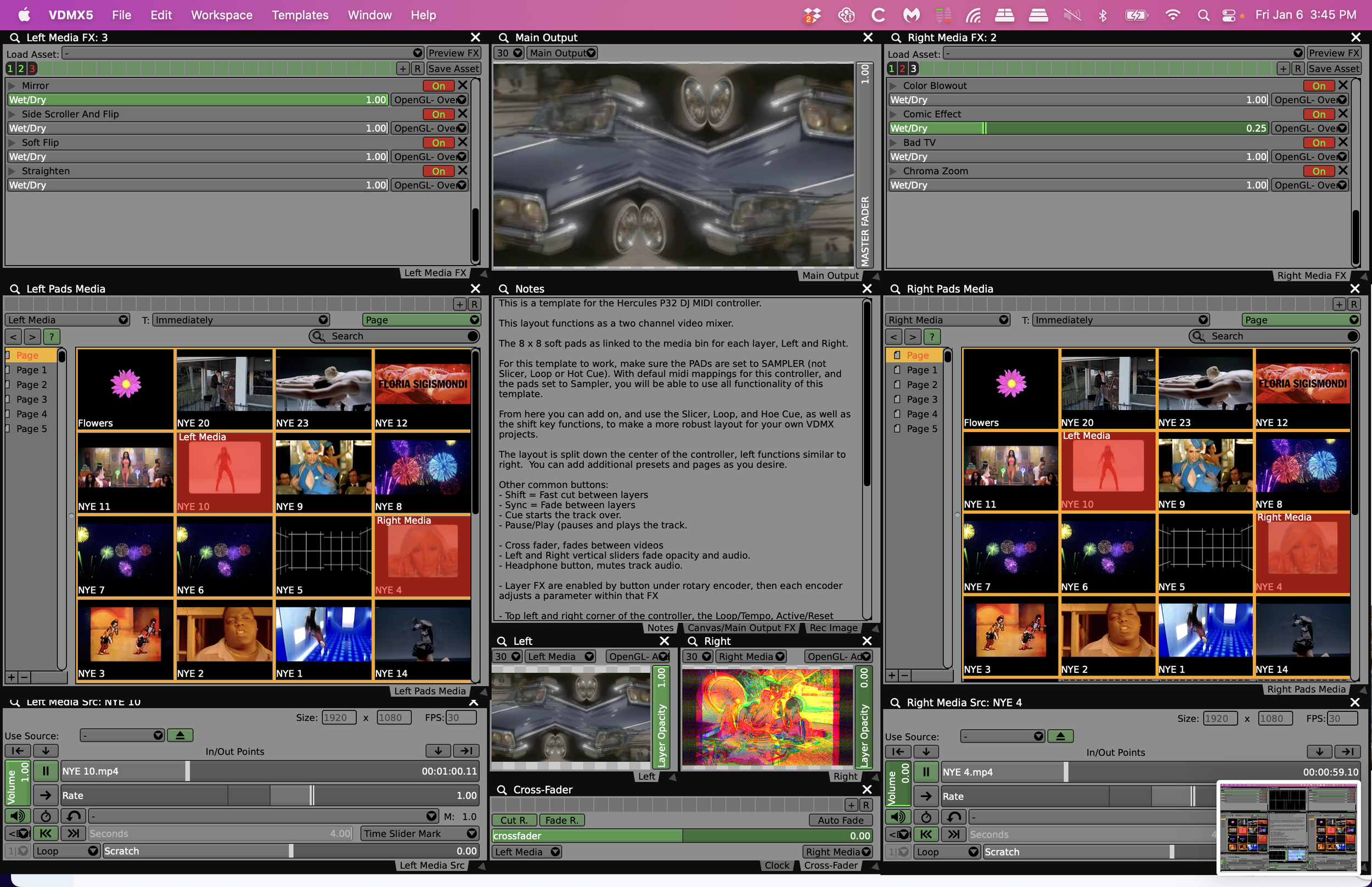

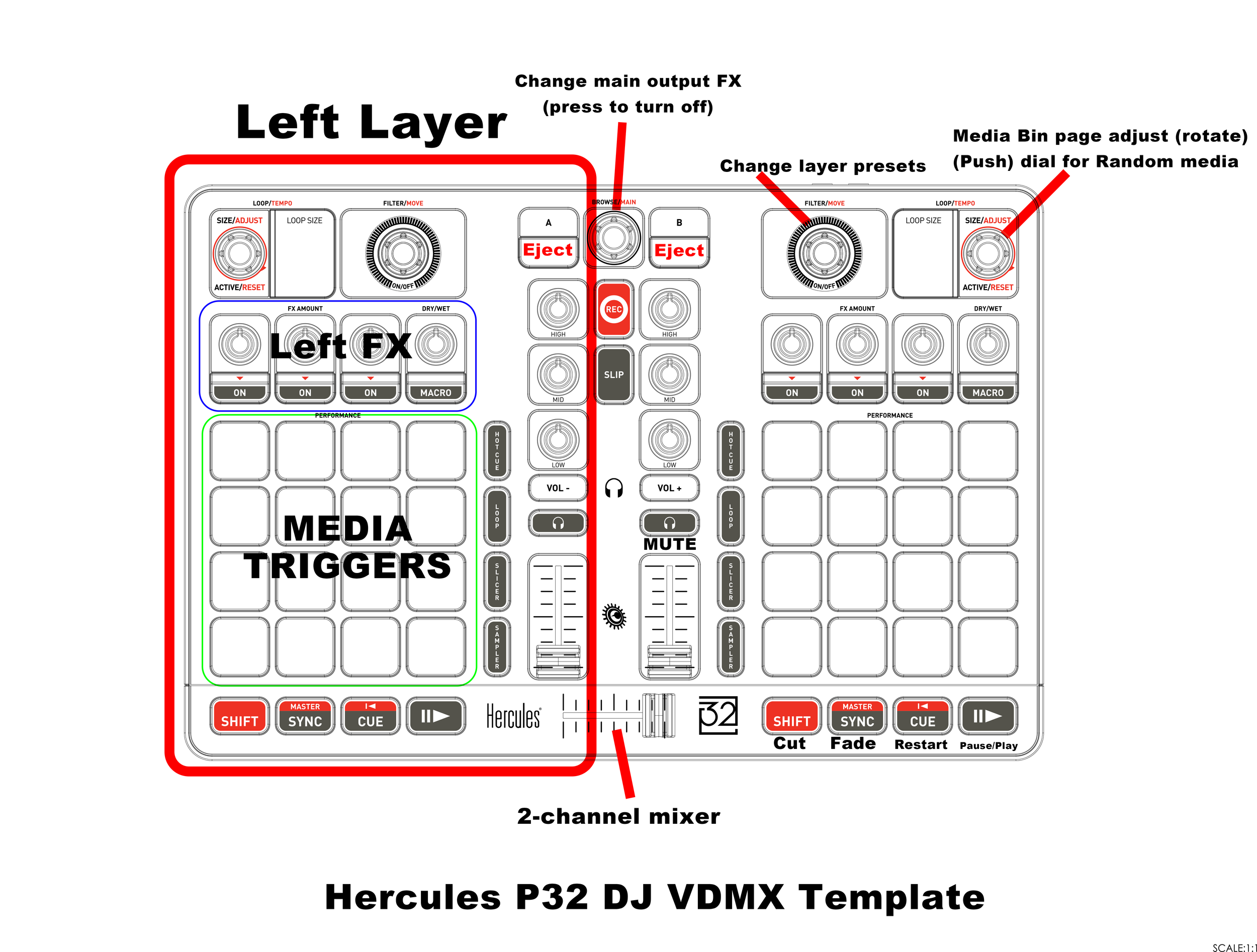

This is a template for the Hercules P32 DJ MIDI controller.

This layout functions as a two-channel video mixer.

The 8 x 8 soft pads are linked to the media bin for each layer, Left and Right.

For this template to work, make sure the pads are set to Sampler mode (not Slicer, Loop, or Hot Cue). With the controller's default MIDI mappings and the pads set to Sampler, all functionality of this template is available.

From here you can build on the template, using the Slicer, Loop, and Hot Cue modes as well as the Shift key functions, to make a more robust layout for your own VDMX projects.

The layout is split down the center of the controller, with the left side functioning like the right. You can add more presets and pages as needed.
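
If you want to keep notes on a mirrored layout like this one, a small script can generate both halves from a single list. The sketch below is only an illustration: the `SIDE_CONTROLS` dictionary and the `build_mirrored_layout` helper are hypothetical names, and the three entries are simply controls this document describes as existing on both sides. It does not read or write anything in VDMX.

```python
# Hypothetical per-side control notes, copied from this document's descriptions.
SIDE_CONTROLS = {
    "Vertical slider": "fade opacity and audio",
    "Headphone button": "mute track audio",
    "Load": "eject media",
}


def build_mirrored_layout(controls, sides=("Left", "Right")):
    """Return one entry per (side, control) pair so both halves stay identical."""
    return {
        (side, name): action
        for side in sides
        for name, action in controls.items()
    }


layout = build_mirrored_layout(SIDE_CONTROLS)
for (side, name), action in layout.items():
    print(f"{side} {name}: {action}")
```

Adding a control to `SIDE_CONTROLS` adds it to both sides at once, which matches the way the template treats the two halves of the controller.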

Other common buttons:

- Shift = Fast cut between layers

- Sync = Fade between layers

- Cue = Restart the track

- Pause/Play = Pause and play the track

- Cross fader = Fade between videos

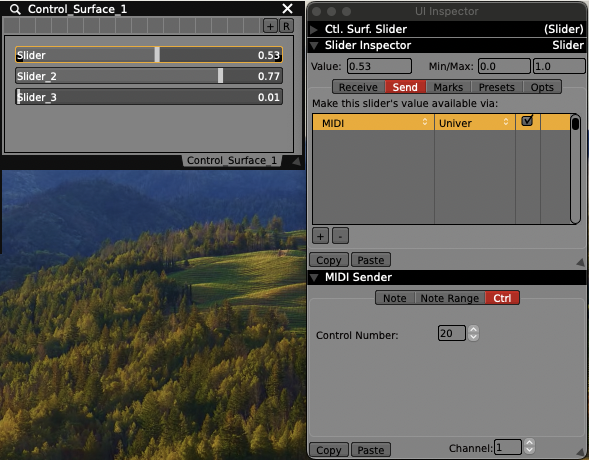

- Left and Right vertical sliders fade opacity and audio.

- Headphone button mutes track audio.

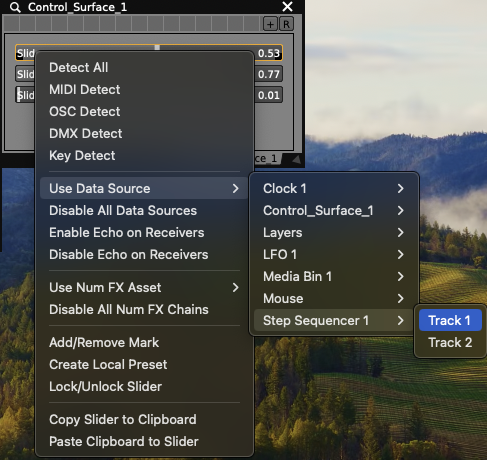

- Layer FX are enabled by the button under each rotary encoder; each encoder then adjusts a parameter within that FX.

- At the top left and right corners of the controller, the Loop/Tempo (Active/Reset) endless rotary encoders scroll through the media bin pages. Pressing down on either encoder triggers a random video from the media bin.

- To the left and right, the Filter/Move endless rotary encoder scrolls through FX presets for each layer. Be aware that this resets the FX each time you move to the next preset. Pressing down on this knob jumps to an empty (FX off) preset.

- Record button starts recording a video of the master output.

- Slip button captures an image of the master output.

- Load A and B eject the media on each side.

- Browse/Main endless rotary encoder switches between main output FX presets. Pressing down resets to an empty preset.

- High, Mid, and Low rotary encoders are currently not mapped to any MIDI controls, but could be mapped to main output FX or an action of your choice.
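
To make the cross fader behavior above more concrete, here is a minimal sketch of how a fader position could translate into layer opacities. It assumes the fader sends a standard 7-bit MIDI control value (0 to 127) and uses a simple linear curve; the function name `crossfade_opacities` is hypothetical, and VDMX may use a different curve internally.

```python
from __future__ import annotations


def crossfade_opacities(cc_value: int) -> tuple[float, float]:
    """Map a 7-bit MIDI control value (0-127) to (left, right) layer opacities.

    Assumption: a linear crossfade, where 0 is fully left and 127 is fully right.
    """
    if not 0 <= cc_value <= 127:
        raise ValueError("MIDI control values must be in the range 0-127")
    position = cc_value / 127.0  # 0.0 = fully left, 1.0 = fully right
    return 1.0 - position, position


left, right = crossfade_opacities(0)
print(left, right)  # fader fully left: only the left layer is visible
```

With a linear curve the two opacities always sum to 1, so moving the fader from one end to the other hands the picture over from the left layer to the right layer.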