Getting Started

1. Open the “OCR Example” template from the Templates menu.

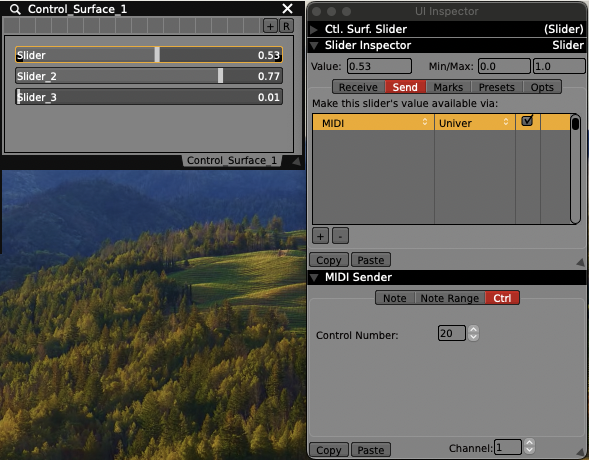

2. In the Workspace Inspector (Cmd+1), add a Control Surface plugin.

3. Create a Pop-Up Button and label its items (e.g., Red, Green, Blue).

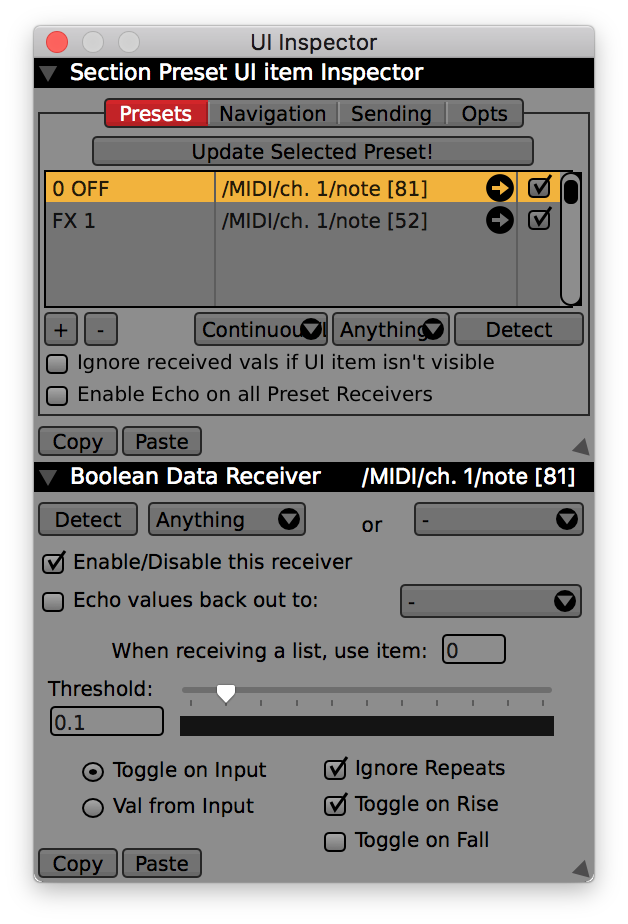

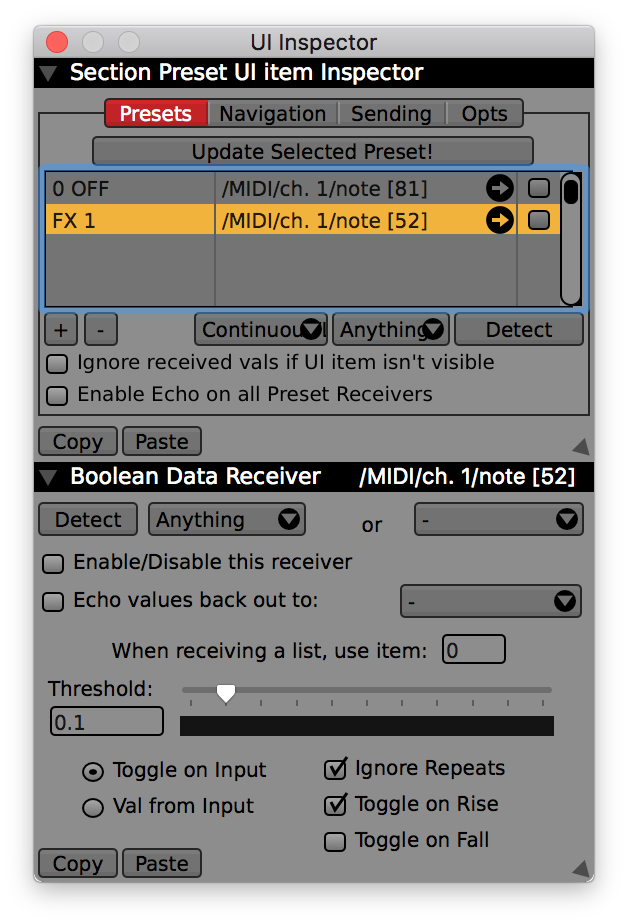

4. In the UI Inspector (Cmd+2), set the pop-up to be controlled by the OCR text string:

• Navigation > Select by string

• Data-Source > OCR Text
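
The "Select by string" behavior can be pictured as an exact string lookup over the pop-up's labels. The Python sketch below is purely illustrative and is not VDMX code: the function name `select_by_string` and the label list are hypothetical, and the exact, case-sensitive comparison is an assumption based on the "Case matters" tip later in this guide.

```python
from typing import Optional, Sequence


def select_by_string(labels: Sequence[str], ocr_text: str) -> Optional[int]:
    """Return the index of the label equal to ocr_text, or None if nothing matches."""
    for index, label in enumerate(labels):
        # Assumed behavior: exact, case-sensitive comparison.
        if label == ocr_text:
            return index
    return None


# Hypothetical labels matching the pop-up items from step 3.
labels = ["Red", "Green", "Blue"]
print(select_by_string(labels, "Green"))  # 1
print(select_by_string(labels, "green"))  # None (case differs)
```

In this model, a scanned string that matches no label leaves the selection unchanged, which is one plausible reading of how the pop-up reacts to unrecognized text.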

Link OCR to Media Playback

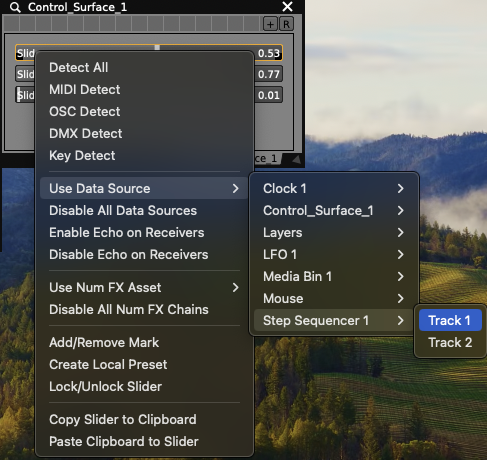

Once your pop-up button is receiving the OCR string:

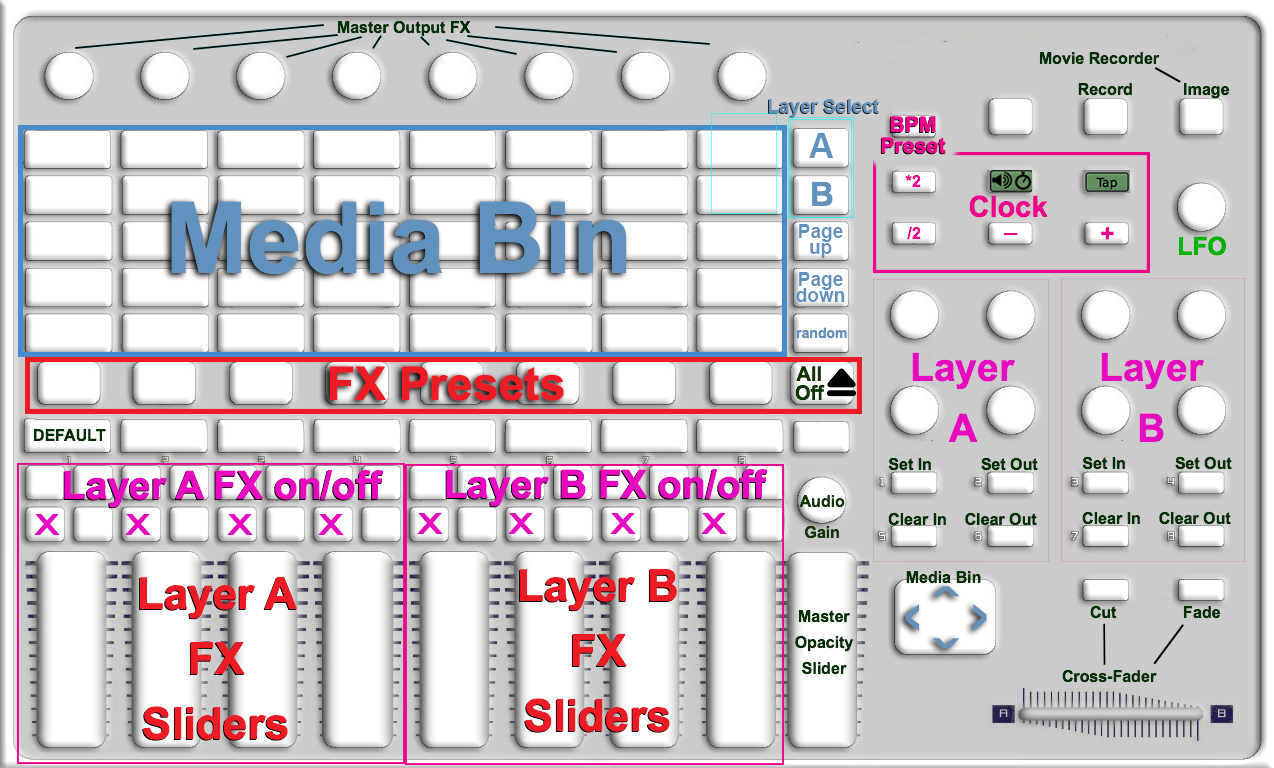

1. Go to the Media Bin Controls tab.

2. Set Trigger by Index and choose the pop-up button as the source.

3. When the pop-up changes value (based on OCR input), the clip at the corresponding index in the media bin is triggered.
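
The link between the pop-up and the media bin can be sketched as a small index-to-clip mapping. This is a conceptual Python model, not part of VDMX: the class `PopUpToBinLink`, the clip file names, and the choice to ignore repeated values are all assumptions made for illustration.

```python
from typing import List, Optional


class PopUpToBinLink:
    """Hypothetical model: trigger the clip whose position matches the pop-up index."""

    def __init__(self, clips: List[str]) -> None:
        self.clips = list(clips)
        self.last_index: Optional[int] = None
        self.triggered: List[str] = []  # history of triggered clips

    def on_popup_value(self, index: int) -> Optional[str]:
        # Assumption: only a change in value triggers a clip.
        if index == self.last_index:
            return None
        self.last_index = index
        # Ignore indexes that have no clip in the bin.
        if 0 <= index < len(self.clips):
            clip = self.clips[index]
            self.triggered.append(clip)
            return clip
        return None


# Hypothetical clip names, ordered to match the Red/Green/Blue pop-up items.
link = PopUpToBinLink(["red.mov", "green.mov", "blue.mov"])
print(link.on_popup_value(1))  # green.mov
print(link.on_popup_value(1))  # None (value did not change)
print(link.on_popup_value(2))  # blue.mov
```

The practical takeaway is that the order of clips in the media bin should match the order of items in the pop-up button.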

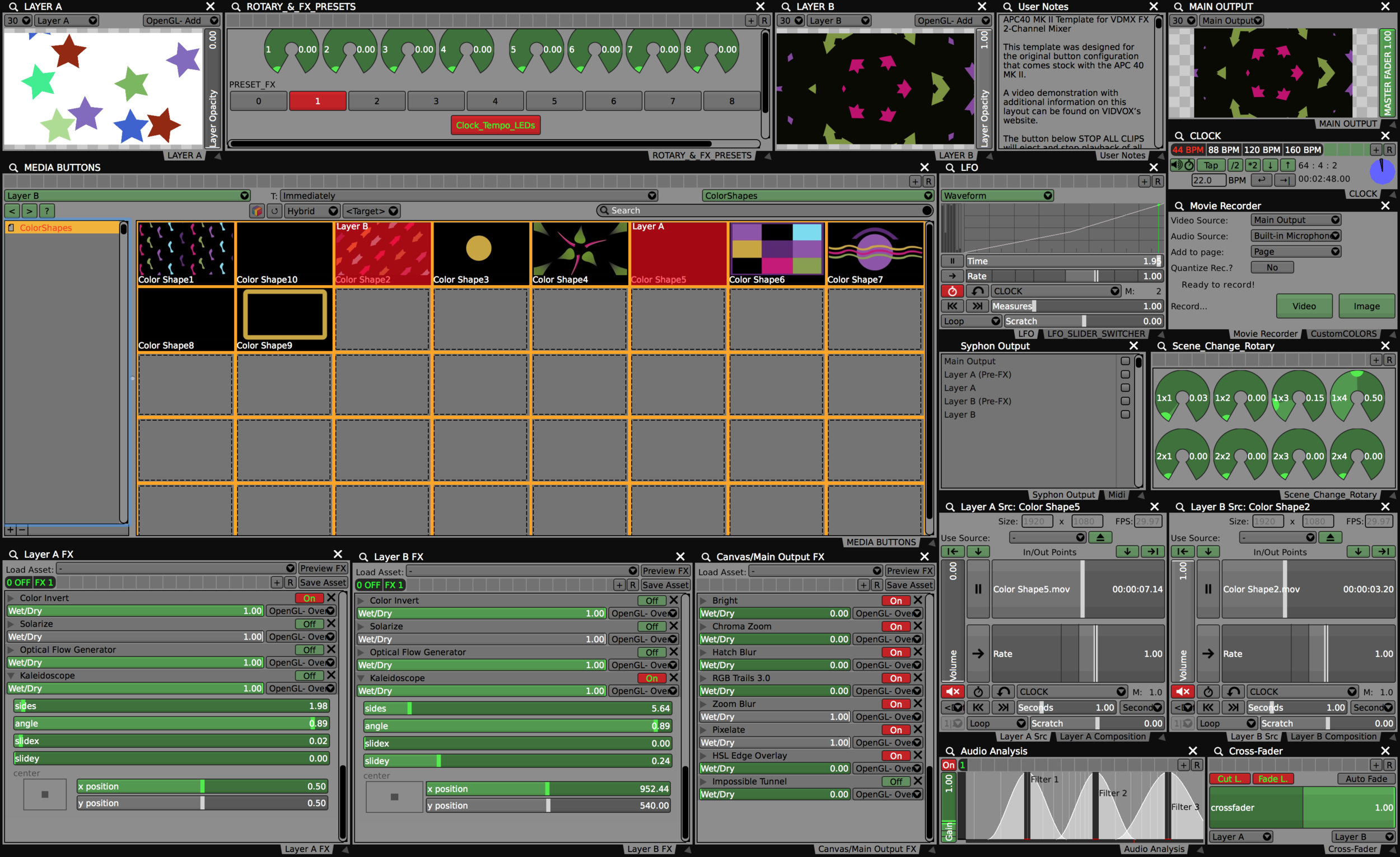

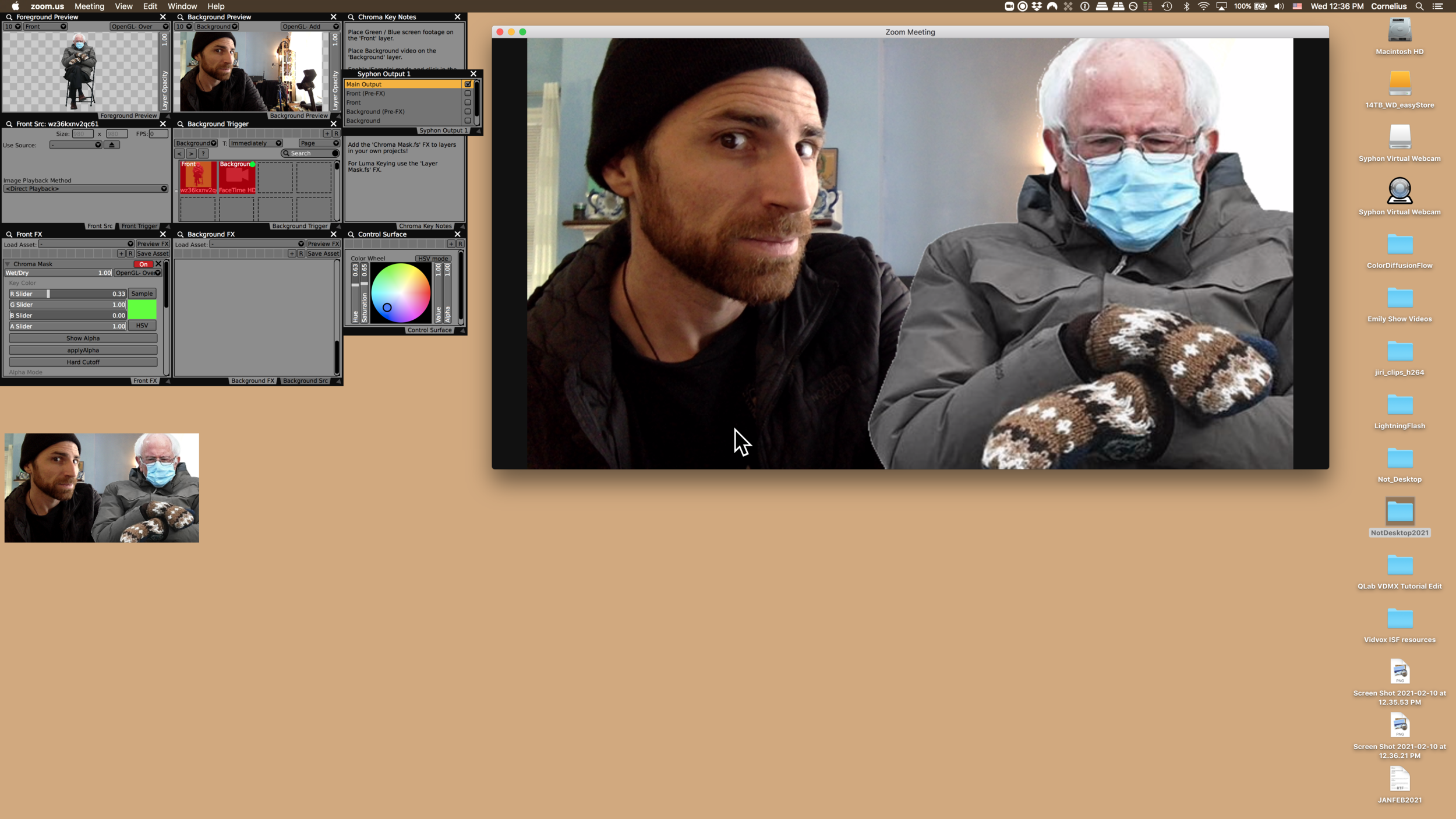

Try It Live

Switch the OCR video source to a FaceTime or external camera, then hold up QR codes or printed text. As the plugin reads values like “Red,” “Green,” or “Blue,” it updates the pop-up and triggers the matching clip.

You can also sync scanning with the Clock plugin to automatically scan at regular intervals, creating hands-free interaction loops.
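
As a rough mental model of interval-based scanning, the sketch below lists the beats on which a scan would fire if one were requested every N beats. It is a hypothetical Python illustration only: the function `scan_beats` is invented for this example, and treating the Clock plugin's output as a simple beat counter is an assumption.

```python
from typing import List


def scan_beats(total_beats: int, every_n_beats: int) -> List[int]:
    """Return the beat numbers (starting at 0) on which a scan would be requested."""
    if every_n_beats <= 0:
        raise ValueError("every_n_beats must be a positive integer")
    return [beat for beat in range(total_beats) if beat % every_n_beats == 0]


# Scanning every 4 beats across 2 bars of 4/4 (8 beats).
print(scan_beats(8, 4))  # [0, 4]
```

A longer interval gives the OCR more time to settle on a stable reading, while a shorter one makes the interaction feel more responsive.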

Tips & Tricks

• Case matters – the OCR text string must match a pop-up label exactly, so a label of “Red” will not be selected by scanned text that reads “red.”

• You can also scan handwritten words or printed stickers, or even project QR codes from your VDMX interface using:

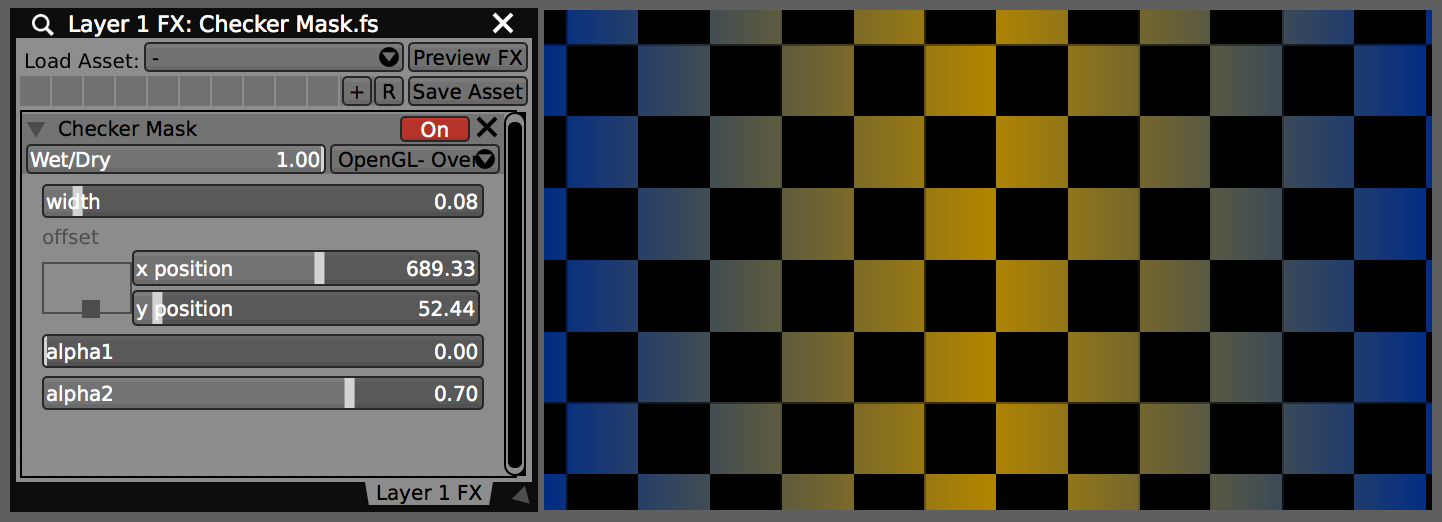

• QR Code Generator Source (creates QR codes as layer sources)

• QR Code Overlay FX (renders QR code overlays on top of any layer)