In the first of a new series of conversations from VIDVOX, hosts David Lublin and Cornelius (ProjectileObjects) sit down with artists Anton Marini (Vade) and SarahGHP to talk about breaking machines, analog history, and the art of making your own tools.

Doing visuals for your first house party? Here's how to make it happen.

How to Make This:

Feel Like This:

It’s happened before. You make video art. You have a projector. Your friend just asked you to do visuals at their house party or for their band. You have no idea where to get started.

Most people who work with live visuals didn’t get their start at the big clubs or touring with the biggest-name musicians. Their story usually sounds more like this: “We got our start with an old projector, running demo software, at a house party for a friend who was DJing, or in a small café on open mic night with a local band nobody had heard of yet.” In this tutorial, we are going to look at some tips and tricks to help make your first time pushing pixels go great.

So what is VJing? Here’s a throwback video that covers the term Video Jockey (VJ):

As with any craft, the time you put into your process will show in your performance. If you only have 24 hours to prepare for a show, fear not! There are plenty of things you can do, but you might have to curb some expectations.

How much time do you have before your show? It’s good to know so you can plot out how you’ll spend that time leading up to it. The four major parts of this post dive into:

Pre-setup / Play / Learn

Teardown

Packing

Setup

Getting Ready:

In the book ‘How Music Works’ by David Byrne, the first chapter includes a section about different spaces for performing in: how sound fills a small space differently than a large one. The same is true for video and lighting. A single low-lumen projector can fill a small, dark room.

“Try to ignore the lovely décor and think of the size and shape of the space. Next to that is a band performing.” – David Byrne

Make sure you’ve got the right gear.

Correct cables, including adapters, power cords, and a power strip, ensuring they are long enough for the situation.

Use a USB webcam or iPhone as a live video input for crowd shots and live sampling.

Relevant tutorial: VJ Travel Kit: What's in our bag

Relevant tutorial: Multi-Channel Live Camera Video Sampler

Make sure your software is installed and runs as expected. (If your software is working, we highly recommend that you DO NOT update your operating system before the show. Big changes to your OS can lead to unexpected problems.)

Use a simple template with 2-4 layers as a starting point.

In VDMX go to Templates > Simple Video Mixer or 4-way VJ Starter

Relevant tutorial: Making a 4 channel video mixer in VDMX

Consider the space.

Figure out where you will set up your computer, the projector, and the projection surface.

While having a real projector screen is ideal, for your first gig, you might be hanging up a white sheet or getting creative with the surface you are projecting onto (such as the ceiling).

Relevant tutorial: Mastering Projector Rigging

Prep your content!

Download video clips for remixing (VDMX Sample Media).

Medium/longer-form videos (e.g., from archive.org) can also be useful footage; in/out points can be set on the fly to create impromptu loops.

Cut up and organize short loops based on themes that can be used for different songs/tracks.

Encode movies to HAP (or PhotoJPEG) instead of H.264, especially if you plan to scratch/scrub on movie time.
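If you batch-convert clips with ffmpeg, a minimal sketch of building the command might look like this. It assumes an ffmpeg build that includes the `hap` encoder (which takes `-format hap`, `hap_alpha`, or `hap_q`); the helper name and file names are hypothetical, and dropping audio with `-an` is just our assumption for silent loops.

```python
import shlex

# Variants accepted by ffmpeg's HAP encoder through its -format option.
HAP_FORMATS = {"hap", "hap_alpha", "hap_q"}

def hap_encode_command(src, dst, fmt="hap"):
    """Build (but do not run) an ffmpeg command that re-encodes src as HAP."""
    if fmt not in HAP_FORMATS:
        raise ValueError(f"unsupported HAP format: {fmt}")
    return [
        "ffmpeg", "-i", src,
        "-c:v", "hap", "-format", fmt,
        "-an",  # assumption: loops for VJing don't need an audio track
        dst,    # HAP is normally stored in a QuickTime (.mov) container
    ]

cmd = hap_encode_command("loop_01.mp4", "loop_01.mov", fmt="hap_q")
print(shlex.join(cmd))
# ffmpeg -i loop_01.mp4 -c:v hap -format hap_q -an loop_01.mov
```

Pass the list to `subprocess.run(cmd, check=True)` to actually run it on each file in a folder.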

Install ProjectMilkSyphon as a backup app (ProjectMilkSyphon Documentation).

Live coding

If you have programming skills, consider using ‘live coding’ as part of your performance, alongside pre-made content.

Live coding can be an entire performance or one element of a larger setup.

There are lots of different tools available for different programming languages.

Use Syphon or Window Capture to route visuals between apps.

Relevant tutorial: Guest Tutorial with Sarah GHP

Buy or borrow a MIDI controller if you can.

Live visuals are often best performed like an instrument.

An HID-supported gaming controller that can connect to a computer over Bluetooth or USB is a great alternative.

Relevant tutorial: Selecting the Ideal MIDI Controller for Visual Performances

DMX Lights

Nice if you can get them.

DMX lights can be expensive (here are some cheap alternatives: RGBW 30W COB, Art-Net adapter, RGBW Strobe), but one or two basic lights are more than enough to fill a small space with color, and they are easy to make part of your performance without spending too much time thinking about lighting design. The goal here is simply to create a basic accent color or strobe for high-intensity moments.

Keep in mind that bright lights in a small space can overwhelm your projection, so use lighting sparingly during your performance.

Relevant tutorial: Sending DMX Values from a VDMX Color Picker
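VDMX sends the DMX values for you in the tutorial above, so this is only for the curious: a minimal Python sketch of the kind of packet an Art-Net adapter receives. It assumes a hypothetical 4-channel RGBW fixture patched at DMX address 1 with channels in R, G, B, W order (check your fixture's manual), and the helper names are illustrative. The byte layout follows the ArtDmx message from the Art-Net specification.

```python
import socket
import struct

ARTNET_PORT = 6454  # standard Art-Net UDP port

def rgbw_from_color(r, g, b):
    """Map a 0..1 RGB color to 0..255 RGBW values (white = shared component)."""
    w = min(r, g, b)
    return [round((c - w) * 255) for c in (r, g, b)] + [round(w * 255)]

def artdmx_packet(universe, channels, sequence=0):
    """Build an ArtDmx packet carrying DMX channel values (0..255)."""
    data = bytes(channels)              # raises ValueError outside 0..255
    if len(data) % 2:                   # ArtDmx expects an even data length
        data += b"\x00"
    if not 2 <= len(data) <= 512:
        raise ValueError("ArtDmx carries 2 to 512 channels")
    return (
        b"Art-Net\x00"
        + struct.pack("<H", 0x5000)     # OpCode ArtDmx, low byte first
        + struct.pack(">H", 14)         # protocol version 14, high byte first
        + bytes([sequence & 0xFF, 0])   # sequence number, physical port
        + struct.pack("<H", universe)   # SubUni then Net (15-bit Port-Address)
        + struct.pack(">H", len(data))  # data length, high byte first
        + data
    )

def send_color(ip, r, g, b, universe=0):
    """Send one RGBW color to a fixture at DMX address 1 via an Art-Net node."""
    packet = artdmx_packet(universe, rgbw_from_color(r, g, b))
    with socket.socket(socket.AF_INET, socket.SOCK_DGRAM) as sock:
        sock.sendto(packet, (ip, ARTNET_PORT))

print(rgbw_from_color(1.0, 1.0, 1.0))  # [0, 0, 0, 255]
```

Splitting pure white out into the W channel is only one simple mapping; many fixtures look better if you also keep some RGB in the mix.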

Hide the privacy dot on external screens!

Apple shows an orange/green privacy dot on all screens when the microphone/camera are being used by apps. This is a fantastic privacy feature but is awful when projecting visuals using a second screen.

Fortunately, there is an official way from Apple to disable the privacy dot on external screens.

Relevant tutorial: Hiding the Orange Dot

Change your desktop picture.

It is usually a good idea to set the desktop picture for external screens to solid black. (This way, if your visual app crashes, the projector doesn’t show all of your embarrassing desktop icons and pictures of your cats.)

Questions? Ask online! There are plenty of visualist communities happy to help newcomers get started. One of those places is the VIDVOX forums.

Show Design:

Got a fun idea? Run with it!

Don’t be afraid to tap into your friends and community and see who all is interested in helping build a set.

This is an example of how two projectors, painted cardboard, and frosted shower curtains can come together to craft a set. No one is complaining about the cardboard when the lasers kick on and the dance party roars.

Pro Tip! An inexpensive frosted shower curtain can act as a “rear projection screen.” It might have some wrinkles, but hey, that’s what all the packing tape is for! :) Plus, they have grommets for easy hanging!

Performing:

Pro tip: Don’t go through your content too quickly!

Remember that your visuals are often the background for the music; you are making a music video. While you are hyper-focused on the exact number of times the same clip has looped, the audience is often paying more attention to the entire scene.

Have generative content available as a backup.

Use ProjectMilkSyphon in playlist mode.

Use audio-reactive ISF shaders and other generators.

Create a "pre-edited" video!

Create a long, pre-edited video that you can play and walk away from. Put some music on while you are experimenting at home and hit record on the movie recorder. Jam for an hour or so, keep the content cuts more consistent, less strobe-y, and now you’ve got a breather during your set.

Get the right brightness level for the space.

Edge detection FX

Difference blend modes

Masking FX (e.g., Remove Background, Layer Mask, Shape Mask)

Relevant tutorial: Masking techniques in VDMX

Be ready to ‘fade out’ the visuals.

In between songs.

During quiet/slow parts, so you can come back in heavy, e.g., the way the drums or other instruments do.

At the end of the show.

If you have a MIDI controller, sync a slider to the main output level in the Workspace Inspector > Layers.

Be ready to make things ‘go crazy.’

Effects such as Convergence or Strobe can add energy to the visuals at moments of high intensity, e.g., when the beat drops or the crowd is cheering.

Adjusting playback rate/scrubbing on movies.

Use audio analysis to drive FX parameters.

Relevant tutorial: More fun audio analysis techniques
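VDMX's Audio Analysis plugin handles this inside the app. Purely as an illustration of the general idea, here is a hypothetical Python sketch that measures the loudness (RMS) of each audio buffer, smooths it with a simple attack/release envelope so parameters jump up on hits and fall back gently, and maps the result into a 0..1 FX parameter. The coefficients and floor/ceiling values are arbitrary assumptions to tune by ear.

```python
import math

def rms(samples):
    """Root-mean-square level of one audio buffer (samples in -1.0..1.0)."""
    if not samples:
        return 0.0
    return math.sqrt(sum(s * s for s in samples) / len(samples))

class EnvelopeFollower:
    """Smooths a level so FX parameters rise quickly and fall gently."""

    def __init__(self, attack=0.5, release=0.05):
        self.attack = attack    # fraction of the gap closed per buffer when rising
        self.release = release  # fraction of the gap closed per buffer when falling
        self.value = 0.0

    def update(self, level):
        coeff = self.attack if level > self.value else self.release
        self.value += (level - self.value) * coeff
        return self.value

def to_param(value, floor=0.02, ceiling=0.5):
    """Map a smoothed level into a clamped 0..1 FX parameter."""
    t = (value - floor) / (ceiling - floor)
    return min(1.0, max(0.0, t))
```

Per audio buffer you would call `to_param(env.update(rms(buffer)))` and feed the result to something like a strobe rate or blur amount.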

Don’t be afraid to be silly.

Wear a funny costume.

Use memes and animated GIFs.

Try to match the mood and energy of the music.

Having a variety of styles and clips in your media library makes it easier to react to changes in mood and energy when performing with a DJ.

Relevant tutorial: Minimalism in VDMX

Relevant tutorial: Creating Video Feedback Loops on a Mac with VDMX

Next Steps:

Check out our free ‘Live Visuals 101’ course materials, which include a combination of technical walkthroughs, case studies, and art theory to help you dive deeper into the world of VJing.

David Lublin and Steve Nalepa “Mild Meld” circa 2008

VDMX and macOS 26!

Hi everyone!

As usual, here is our yearly update guide for people using VDMX who are looking to install the latest version of macOS on their computers.

VDMX6 Demo Project running in macOS 26 Tahoe

Should you update now?

Typically we recommend waiting a while before updating your OS when a new major release comes out, unless you have a good reason to be an early adopter. If you are in the middle of any important projects, you may want to wait so that you don’t lose any time if you run into problems.

So far we have not run into any issues using the latest release of VDMX6 in macOS 26, but if you run into any problems, or just want to let us know about your experiences with Tahoe, please send us feedback using the Report Bug option from the Help menu in VDMX, or send an email to support@vidvox.net.

Make sure to check with the creators of any other software or plugins that you rely on to make sure they are compatible before updating.

Preparing To Update

Before performing a major OS update, it is recommended to:

Perform a full system backup (using Time Machine, or software like Carbon Copy Cloner)

If available, use a second partition or hard drive so that you can keep your old OS install in case you need to downgrade.

Updating to Tahoe

To install macOS 26 Tahoe on your computer, open System Settings and go to the General > Software Update section.

Apple has an official guide to walk you through the process: macOS Tahoe Upgrade Guide

Recommended VDMX Gear Guide 2025

Building the Ultimate VDMX Rig: The 2025 Gear Guide

Ready to build or upgrade your live visual setup? Our comprehensive new gear guide has you covered. We dive deep into the most important choice for any VJ: the right Apple Silicon Mac. Discover why even a budget-friendly Mac Mini can be a powerhouse for standalone installations, and which MacBook Pro models are best for the most demanding shows.

But the computer is just the start. We also cover crucial pro-tips on which macOS version to run for maximum stability, our top picks for essential peripherals like external SSDs, capture cards, and Thunderbolt docks, and a complete checklist for your VJ travel kit. Whether you're a seasoned pro or just starting out, this guide has the recommendations you need to build a rock-solid VDMX system.

PSNR score comparisons for commonly used real-time video codecs.

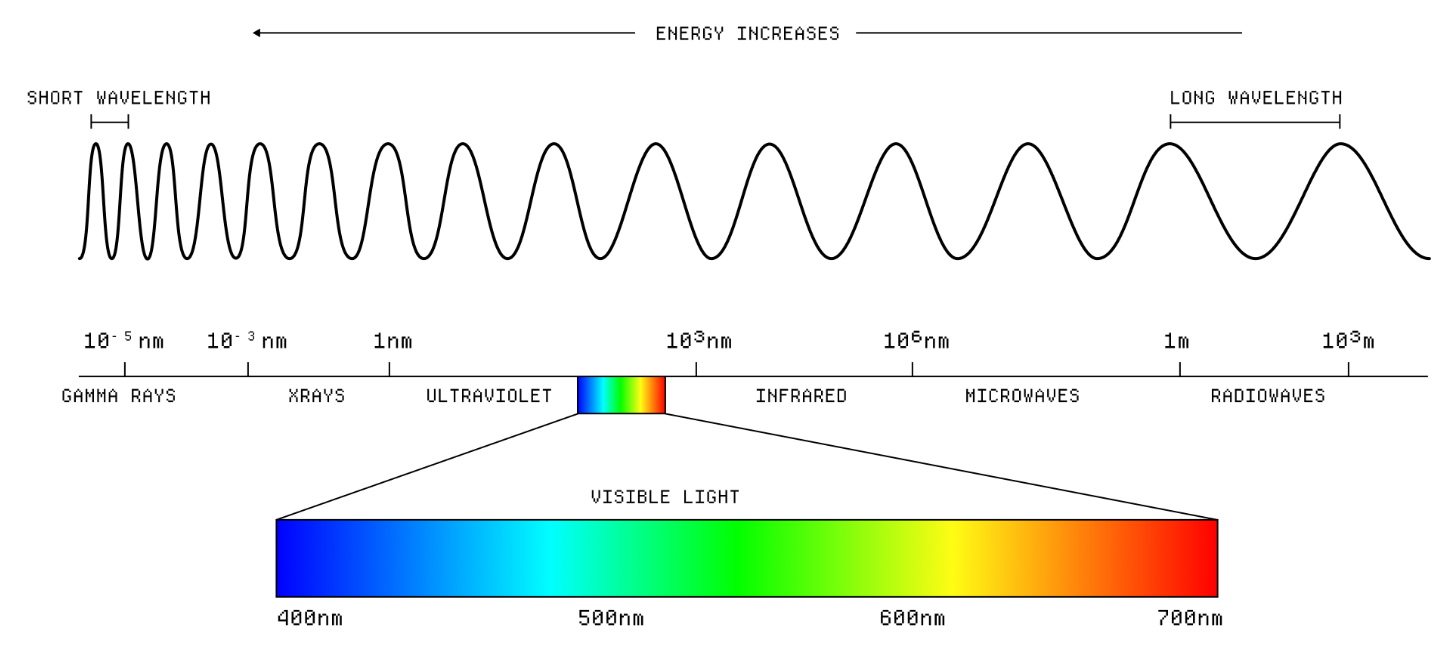

Color Spaces, Compression Quality Metrics, and the Human Eye

In a recent blog post we dove into the results from various quality metrics comparing HAP alongside other video codecs. While we ran all of our tests using PSNR (Peak Signal-to-Noise Ratio), SSIM (Structural Similarity Index Measure), and VMAF (Video Multi-Method Assessment Fusion), we did not go into much detail about what each of these three metrics actually means and how they are used to evaluate the quality of image compression.

As a recap of those metrics:

PSNR: Peak Signal-to-Noise Ratio measures the pixel-by-pixel error between the original and compressed image across all color channels, relative to the maximum possible pixel value. Measured in dB; higher values are better.

SSIM: Structural Similarity Index Measure compares the structural similarity between images, considering luminance, contrast, and structure. Values range from 0 to 1, with 1 being identical.

VMAF: Video Multi-Method Assessment Fusion is Netflix's perceptual quality metric that correlates with human visual perception. Scores range from 0 to 100, with higher being better.
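For reference, PSNR is computed from the mean squared error (MSE) between the original and compressed pixel values: PSNR = 10 · log10(MAX² / MSE), where MAX is the largest possible value (255 for 8-bit channels). A minimal Python sketch, assuming both images are given as flat lists of 8-bit channel values of the same length (real tools typically compute this per plane and report several numbers):

```python
import math

def psnr(reference, test, max_value=255):
    """PSNR in dB between two equal-length sequences of channel values."""
    if len(reference) != len(test) or not reference:
        raise ValueError("images must be non-empty and the same size")
    mse = sum((a - b) ** 2 for a, b in zip(reference, test)) / len(reference)
    if mse == 0:
        return math.inf  # identical images have no noise at all
    return 10 * math.log10(max_value ** 2 / mse)

reference = [100, 100, 100, 100]
compressed = [101, 99, 100, 100]
print(round(psnr(reference, compressed), 2))  # 51.14
```

Because every error counts the same regardless of where it lands in the image, two very different-looking artifacts can produce identical PSNR scores.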

Computing PSNR scores.

Whereas PSNR is a direct numerical measure of how lossy an image compression is, the SSIM and VMAF metrics take perceptual qualities such as structural similarity and contrast into account. While PSNR values are extremely useful, SSIM and VMAF are more closely tuned to how the human eye actually perceives color and brightness information. A high PSNR score doesn’t always mean high-quality image compression, especially in real-world situations.

Fortunately, in the case of HAP R we saw improvements in PSNR, SSIM, and VMAF compared to HAP and HAP Q, showing that it is a huge step forward in quality on all fronts, with the trade-off being slower compression times because it is more computationally expensive to encode.

A deeply related topic is the different models and gamuts used to represent color information. While we tend to think of an image processing pipeline such as VDMX as representing every pixel as an RGBA value, behind the scenes there is a lot more going on to make sure that the colors captured by your camera appear on screen the way you expect.

The Making Software blog (written and illustrated by Dan Hollick) has a wonderful write-up, ‘What is a color space?’, with an in-depth lesson on ‘color’ as it exists in the real world (wavelengths of light), how those wavelengths are interpreted by our eyes (with three types of ‘cones’), how color is represented on your computer display (as pixels), and all about the different ways that colors are stored digitally to be optimized for different gamuts (ranges) of color.

“Digital color is about trying to map this complex interplay of light and perception into a format that computers can understand and screens can display.”

From Dan Hollick’s ‘What is a color space’ blog post, What is color? illustration.

While reading through the Making Software post, keep in mind how details like this come into play when measuring compression quality with simple error-based metrics like PSNR versus perceptual metrics like SSIM and VMAF:

“There is also a non-linear relationship between the intensity of light and our perception of brightness. This means that a small change in the intensity of light can result in a large change in our perception of brightness, especially at low light levels. You can see this in action in these optical illusions, where the perceived brightness of a color can change based on the surrounding colors.”

Optical illusion from ‘What is a color space’ – perceived brightness of a color can change based on the surrounding colors.
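One practical consequence of this non-linearity is that most 8-bit images are not stored as linear light intensities. The sRGB standard applies a transfer curve (often loosely called "gamma") so that more code values go to darker tones, where our eyes are more sensitive. A short Python sketch of the standard sRGB decode/encode functions, with channel values normalized to 0..1:

```python
def srgb_to_linear(c):
    """Decode an sRGB-encoded channel value (0..1) to linear light."""
    if c <= 0.04045:
        return c / 12.92          # short linear segment near black
    return ((c + 0.055) / 1.055) ** 2.4

def linear_to_srgb(c):
    """Encode a linear light value (0..1) back to an sRGB channel value."""
    if c <= 0.0031308:
        return c * 12.92
    return 1.055 * c ** (1 / 2.4) - 0.055

print(round(srgb_to_linear(0.5), 3))  # 0.214
```

So a pixel stored at half of full scale emits only about a fifth of the light of white, which is part of why an error of a few code values matters more in some tonal ranges than in others.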

Another interesting historical example of a color space designed around technical requirements is YIQ, the color space used by the NTSC standard during the transition from black & white to color television. From the Wikipedia page on NTSC:

“NTSC uses a luminance-chrominance encoding system, incorporating concepts invented in 1938 by Georges Valensi. Using a separate luminance signal maintained backward compatibility with black-and-white television sets in use at the time; only color sets would recognize the chroma signal, which was essentially ignored by black and white sets.

An image along with its Y′, U, and V components, respectively (Wikipedia)

The red, green, and blue primary color signals (R′G′B′) are weighted and summed into a single luma signal, designated Y′ which takes the place of the original monochrome signal. The color difference information is encoded into the chrominance signal, which carries only the color information. This allows black-and-white receivers to display NTSC color signals by simply ignoring the chrominance signal. Some black-and-white TVs sold in the U.S. after the introduction of color broadcasting in 1953 were designed to filter chroma out, but the early B&W sets did not do this and chrominance could be seen as a crawling dot pattern in areas of the picture that held saturated colors.”
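The weighting described above can be sketched in Python using the commonly published (rounded) FCC NTSC coefficients for Y′IQ, with gamma-corrected R′G′B′ inputs from 0 to 1. A neutral gray produces zero I and Q, which is why a black-and-white set could simply ignore the chroma signal and still show a correct image:

```python
def rgb_to_yiq(r, g, b):
    """Convert gamma-corrected R'G'B' (0..1) to NTSC Y'IQ."""
    y = 0.299 * r + 0.587 * g + 0.114 * b     # luma: all a B&W set needs
    i = 0.5959 * r - 0.2746 * g - 0.3213 * b  # chroma (orange-blue axis)
    q = 0.2115 * r - 0.5227 * g + 0.3112 * b  # chroma (purple-green axis)
    return y, i, q

y, i, q = rgb_to_yiq(0.5, 0.5, 0.5)
print(round(y, 3), round(i, 6), round(q, 6))
```

Because each chroma row sums to zero, any gray (r = g = b) carries no chroma at all.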

While YIQ is not commonly used beyond NTSC, you are more likely to run into YUV or one of its variations. In fact, the HAP Q codec makes use of a similar color space, YCoCg, using a shader to quickly convert the pixel data to and from RGB as part of the pixel pipeline.
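The basic YCoCg transform only needs additions and divisions by powers of two, which makes it inexpensive to undo in a shader. Here is a Python sketch of the plain forward and inverse transform; HAP Q's actual encoding (Scaled YCoCg stored in DXT5 blocks) adds scaling and block compression on top, which this sketch does not attempt:

```python
def rgb_to_ycocg(r, g, b):
    """Forward YCoCg transform for channel values in 0..1."""
    y = r / 4 + g / 2 + b / 4    # luma
    co = r / 2 - b / 2           # orange chroma
    cg = -r / 4 + g / 2 - b / 4  # green chroma
    return y, co, cg

def ycocg_to_rgb(y, co, cg):
    """Inverse transform: recover RGB with only additions and subtractions."""
    tmp = y - cg                 # equals (r + b) / 2
    return tmp + co, y + cg, tmp - co

print(rgb_to_ycocg(1.0, 1.0, 1.0))                  # (1.0, 0.0, 0.0)
print(ycocg_to_rgb(*rgb_to_ycocg(0.25, 0.5, 0.75)))  # (0.25, 0.5, 0.75)
```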

When we are talking about color representations in digital image and video codecs, the choice of a color space is part of the design considerations. When pixel data is stored as YUV, more of the data typically goes to the luma channel than to the chroma channels, whereas RGB-based codecs treat all three channels alike. This typically results in slightly lower color accuracy, with the benefit of improved brightness and contrast accuracy. As the human eye is more sensitive to differences in brightness than in color, it can be beneficial in some cases to use color spaces that are tuned to these qualities. Similarly, when creating metrics that evaluate how noticeable image compression is to the human eye (as opposed to how well it performs numerically), these characteristics are taken into account. The time it takes to convert to and from RGB also needs to be considered when optimizing compression algorithms for real-time usage.
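One common way YUV-style formats spend more data on luma than on chroma is chroma subsampling, where the two color-difference planes are stored at reduced resolution. The arithmetic for an uncompressed 8-bit 1920x1080 frame can be sketched in Python (this illustrates YUV formats in general, not how any particular HAP codec stores its data):

```python
# Fraction of the luma resolution kept for each of the two chroma planes.
CHROMA_FRACTION = {"4:4:4": 1.0, "4:2:2": 0.5, "4:2:0": 0.25}

def bytes_per_frame(width, height, scheme="4:4:4", bytes_per_sample=1):
    """Uncompressed size of one Y'CbCr frame (assumes even width and height)."""
    luma = width * height
    chroma = 2 * luma * CHROMA_FRACTION[scheme]  # Cb and Cr planes
    return int((luma + chroma) * bytes_per_sample)

for scheme in CHROMA_FRACTION:
    print(scheme, bytes_per_frame(1920, 1080, scheme))
# 4:4:4 6220800
# 4:2:2 4147200
# 4:2:0 3110400
```

4:2:0 halves the frame size compared to full-resolution 8-bit RGB while keeping every luma sample, which is exactly the trade-off described above.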

Beyond all this, image processing FX often use a different color space for a variety of reasons. For example, a hue shift is a difficult operation to perform on RGB values, but very easy to do with HSV or HSL values. If you look at the code behind the Color Controls ISF, you’ll notice that each pixel is converted from RGB to HSV, adjusted based on the input parameters, and then converted back to RGB for the output.

Basic GLSL code from Color Controls.fs for converting RGB to HSV and HSV to RGB.
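The same round trip can be sketched in Python with the standard library's colorsys module. This is not the GLSL from Color Controls.fs, just the equivalent steps: convert to HSV, rotate the hue, convert back. Hue is expressed in turns from 0 to 1, so adding 1/3 moves red to green:

```python
import colorsys

def hue_shift(r, g, b, amount):
    """Rotate the hue of an RGB color (channels 0..1) by `amount` turns."""
    h, s, v = colorsys.rgb_to_hsv(r, g, b)
    h = (h + amount) % 1.0          # hue wraps around the color wheel
    return colorsys.hsv_to_rgb(h, s, v)

print(hue_shift(1.0, 0.0, 0.0, 1 / 3))  # roughly (0.0, 1.0, 0.0)
```

Saturation and brightness are untouched, so grays (saturation 0) come back unchanged no matter how far the hue is rotated.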

The end of Dan’s blog post on color spaces sums it all up very nicely,

“So what have we learned? Digital color is a hot, complicated mess of different color spaces, models, and management systems that all work together to try to make sure that the color that gets to your eyeball is somewhat close to what was intended.”

What more could we ask for?

VDMX 6.2 Update: Pop over previews, directly capture audio from other apps, and more!

The VDMX 6.2 update is here! Directly capture audio from other applications, bundled ProjectMilkSyphon, improved fullscreen output panel, pop out previews, and more!

Arka Kinari Performance in Sydney Harbour

Grey Filastine & Nova: Arka Kinari

"Our work has always spoken about the disfigurement of nature... but there was still way too much use of polluting airplanes," explains musician and multimedia artist Grey Filastine. Faced with this creative and ethical dilemma, he and his partner Nova embarked on an audacious journey: they turned a traditional sailing ship into Arka Kinari, a low-carbon, seaworthy vessel for their audiovisual performances. Part art installation, part tour vehicle, and part home, the project represents a complete fusion of life and creativity. We sat down with Grey to discuss the genesis of this incredible undertaking, from the practical need for better sound systems in remote Indonesian islands to the grand vision of a truly sustainable artistic practice. Read on for a look inside this "tiny floating universe."

HAP R Benchmarks

It has been just over TWELVE YEARS since the initial release of the HAP video codecs, and in this blog post we are going to be diving into the details behind the newest addition to the HAP codec family – HAP R, an ultra high quality GPU accelerated codec.

In these benchmarks we’ll be looking at how HAP R compares to the other HAP codecs in metrics like image quality and file size, and discussing some of the technical details for those who are curious.

VDMX6 Update – Video Tracking, OCR, Scopes, Color Transfer and more!

Today we are extremely excited to announce the first big round of updates to VDMX6 that begin to fully take advantage of our new Metal-based rendering engine introduced last fall. This release adds a collection of powerful video analysis based plugins and FX built on top of Apple’s CoreML and Vision frameworks to help people bring their visuals to the next level.

New data-source plugins:

Video Tracking

Faces, hands, and human bodies can be detected and their positions published as data-sources for controlling parameters throughout VDMX. It can also be used to generate masking images and visualizations that can be previewed and used as part of the video processing pipeline.

Scopes

The Scopes plugin generates and displays a variety of useful visualizations of the color data for a provided video stream including waveform and vectorscope modes.

OCR

Scans images for text and QR codes and publishes detected strings as data-sources to use with text fields, text generators, and anywhere else strings can be received.

New FX:

Color Transfer FX

Automatically shift the color and brightness levels of a video stream to match a reference image.
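We won't speculate on exactly how the Color Transfer FX is implemented, but a well-known approach to this kind of matching (popularized by Reinhard et al.) shifts and scales each channel so that its mean and standard deviation match the reference image. The original technique works in a perceptual lαβ color space; this minimal Python sketch uses plain RGB values from 0 to 1 for simplicity, and the function names are hypothetical:

```python
from statistics import mean, pstdev

def transfer_channel(source, reference):
    """Shift and scale one channel so its mean and spread match the reference."""
    s_mean, s_std = mean(source), pstdev(source)
    r_mean, r_std = mean(reference), pstdev(reference)
    scale = r_std / s_std if s_std > 0 else 1.0   # flat source: only shift it
    return [min(1.0, max(0.0, (v - s_mean) * scale + r_mean)) for v in source]

def color_transfer(source_pixels, reference_pixels):
    """Apply per-channel statistics matching to lists of (r, g, b) tuples."""
    channels = [
        transfer_channel([p[c] for p in source_pixels],
                         [p[c] for p in reference_pixels])
        for c in range(3)
    ]
    return list(zip(*channels))   # back to one (r, g, b) tuple per pixel
```

Working per channel in RGB tends to produce color casts when channels are strongly correlated, which is the reason the published method moves to a decorrelated color space first.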

Remove Background FX

Instantly remove the background (or foreground) of a video stream using person segmentation.

More FX:

Blur and Overlay Faces FX: Uses face tracking to blur, pixelate, and overlay images on top of faces.

Segmented Color Transfer: Segmented version of the new Color Transfer FX that allows for selecting different references for the foreground and background. (macOS 14+ only)

New Segmented Blur FX: Applies different levels of blur to the background & foreground of a video stream. (macOS 14+)

You can see these features in action in the new set of video tutorials:

or try out these features directly in the VDMX6 demo with the bundled example setups that can be loaded from the Templates menu.

SONICrider “painting with sound”

Who is SONICrider - Jurgen Winkel?

Jurgen Winkel

Artist – Producer – Musician – Performer (SONICrider, BLIND, LSDice, The Phonons, …)

Who are you, and what do you do?

I’m Jurgen, and I have been in love with sound and vision for as long as I can remember: when I hear sounds I “see” visuals, and when I see visuals I “hear” sounds.

Sound-wise, I started recording music from the radio with a cassette recorder in primary school, and it became a collection in which synthesizers played an important role; Pink Floyd was the favorite. The next step was recording little parts of that music onto a second cassette recorder while modulating the speed, and my love for sound design was born.

Visually, I became fascinated by natural “art”: sleeping outside for the first time as a kid and discovering the stars and the universe, walking along the sea studying the rhythm of the waves, traveling by train while the world passes by. Later on I incorporated this fascination into creating visuals where the natural flow is the common thread.

During my studies in Eindhoven I met a guy who performed poetry; I told him that I heard sounds in my head supporting his words. That’s how the band BLIND was born, and it became an industrial/rock/electronic duo: we toured Europe from the mid-eighties until the mid-nineties, released a couple of albums, and when playing live we used 8 mm films (shot by us) as part of the stories we told.

BLIND live

Besides BLIND, I began doing sound design for local artists, dancers, and filmmakers, and slowly built up a massive archive of sounds that I didn’t use for those projects. Creating tracks from that archive became SONICrider.

As SONICrider I use modular and DIY gear as my main instruments, creating everything from ambient to experimental techno; when I play live, I use visuals like we did with BLIND. I built my modular gear like the members of a band (drums, bass/guitar, synth, percussion, sounds), and each case can be used standalone when I do collaborations:

– LSDice: experimental acid/dance (David plays two MC-505s)

– The Phonons: ambient jazz (Siem plays a bass guitar)

– Gijs van Bon (a light artist): I create live sound while touring with his installation, and a two-week visit to Taiwan to play at a light festival was a real highlight.

– Several one time collabs.

Since the coronavirus took over the world, I have zoomed in on creating visuals and telling stories (which I had only done occasionally before). Some of those visuals have been presented at festivals around the world that I, as a musician, probably never would have attended in person.

Modular music is very well suited to making (ambient) drones that exceed regular playing times, and it was hard to find a label to release these tracks. So I started EAR (Emotional Analog Resonance), and so far there have been 33 monthly releases.

Making a living from the music I create is sometimes hard, so projects like mixing (sometimes live), mastering, teaching synthesis, and organizing modular events are a welcome addition.

What tools do you use?

It all started with two cassette recorders and tools to manipulate the speed. The machinery I used in BLIND was a Roland 909, two AKAI samplers (one with a keyboard and MIDI), a Roland drum pad, and a Roland Juno-60.

Hardware has remained the main source for most of my music, while working in the box is mostly for mastering tracks. In-the-box arrangements for “commissioned art” are a second path, using Ableton to manipulate recorded pieces from my hardware or field recordings.

SONICrider live

To add visuals while playing live, I use Ableton, VDMX, and DMXis (light control); these visuals are part of the expression, “the story I tell.” Using VDMX opened up a world of opportunities for manipulating the visuals with the live sound: via a UAD interface I use sound filters to control VDMX. The visuals range from abstract to real material, all shot by me. “Although electronic music uses sampling a lot, I always use my own material; it feels like it should be.”

VDMX pushed me in a creative direction: starting with a visual and adding sound/music as a “soundtrack,” slowly entering the world of short films, which has been an exciting exploration.

The hardware mentioned is a collection I have built over 40 years, and the early gear is still in the studio, working well. Besides tape recorders, Moog synths, and other hardware (drum machines, synths, DIY instruments), modular gear is the core. The cool thing about modular gear is that you can build a new instrument for each project, like a painter mixing new colors.

“The Sound And Vision Lab” in the center of Eindhoven is my home base for creating music and visuals, carrying out mixing and mastering jobs, and coaching and teaching sound design, synthesis, Ableton, VDMX, and creative thinking. The studio is always ready to stream a live performance, solo or with friends/musicians.

The Sound And Vision Lab

Tell us about some of your recent and upcoming projects!

I recently released an album called “A Mental Reset” on Cyclical Dreams (Argentina). This second album of a trilogy drives the mind into a reset: “we need to act to keep our planet a healthy, peaceful and safe place.” The first album of the trilogy (also released by Cyclical Dreams) is called “ELAPSUS,” or “escaping,” which we try to do but cannot. The last album of the trilogy, “Future Proofed” (2027), will take shape in my thoughts over the coming months and then be turned into tracks.

SONICrider – A Mental Reset

Most SONICrider tracks and albums are released independently on the SONICrider label via Bandcamp or via small third-party labels around the world. I have released over 300 tracks and have the feeling this will never stop 🙂

Playing live is special and gives energy like nothing else, so on a regular basis a selection of gear hits the road to play gigs and festivals in Europe. A special trip was a two-week tour in Taiwan, playing live sounds as part of a light artwork where water, light, and sound meet.

And yes, visual releases using VDMX are an ongoing path, sometimes as a (live) stream from “The Sound And Vision Lab” or as prerecorded films for festivals around the world.

SONICrider – Deconstruction Of A Dream (for the Cyclical Fest 2024)

Every now and then SONICrider plays a live set on a local radio station as the start of an “electronic radio show,” and on a more regular basis I organize modular events in Eindhoven. The foundation “Modulab Eindhoven” has the mission “Liberating Electronic Music”: in the afternoon there are workshops, in the evening three performances by international artists, and in between some vegan food.

As mentioned before, EAR, which I established, is a label focusing on non-commercial electronic music. EAR releases should take listeners on a journey through experimental, industrial, and ambient soundscapes, with playing times that exceed “regular” releases.

What drives me?

Finding a balance between the past and the future, where the now is key. Caring about and sharing a peaceful, healthy, and safe place for everybody, regardless of race, religion, gender, or age, by listening to what others think, respecting other opinions, and being yourself. Life is short. Act you should!!

All my life I have felt sad when violence hits people, and unfortunately “history repeats itself”: war is around us, and in Europe it is very much a topic. As an artist I can contribute music supporting those who suffer; as a human being I try to stand for Peace, Love, Unity and Respect #PLUR.

Release by SONICrider supporting Ukraine:

Entering my sixties, I keep on doing what I have done for the last 40 years: expressing my view through music by “Painting with Sound”.

Take care, Jurgen

Some Links:

– SONICrider website

– SONICrider YouTube

– SONICrider Linktree (all links)

– EAR label

ISF for Metal – Now open source!

Today we are excited to announce the open source release of our latest codebase for working with Metal and rendering ISF shaders on macOS: ISFMSLKit

This codebase was initially written for the VDMX6 update last fall, and is now ready for use by other developers who are looking to support ISF in a Metal environment on the Mac. Along with this we’ve also open sourced VVMetalKit, our general purpose Metal framework which powers the rendering engine in VDMX6 and other utility apps.

What is ISF?

Before we dive into ISF for Metal, let’s do a quick review of the ISF specification itself.

ISF, the Interactive Shader Format, is a cross-platform standard for creating video generators, visual effects, and transitions. It was originally created for use in VDMX in 2013, and is now supported in over 20 different apps and creative coding environments.

ISF files contain two main components:

JSON Metadata

GLSL Code

Host applications use these together: the JSON metadata describes which parameters to expose in a user interface, and the GLSL code uses those parameters within the rendering pipeline.

An example ISF generator as code and as a source in VDMX.
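In an ISF file, the JSON metadata lives in a comment block at the very top of the file, followed by the GLSL code. A minimal Python sketch of how a host might split the two and read the INPUTS it should build controls for (the example shader is our own, and real hosts do much more validation than this):

```python
import json

def parse_isf(source):
    """Split ISF source text into (metadata dict, GLSL body)."""
    text = source.lstrip()
    if not text.startswith("/*"):
        raise ValueError("ISF files start with a JSON comment block")
    end = text.find("*/")
    if end == -1:
        raise ValueError("unterminated JSON comment block")
    metadata = json.loads(text[2:end])   # the JSON between /* and */
    glsl = text[end + 2:].strip()        # everything after is shader code
    return metadata, glsl

EXAMPLE_ISF = """/*{
    "ISFVSN": "2",
    "DESCRIPTION": "Solid color generator",
    "CATEGORIES": ["Generator"],
    "INPUTS": [
        {"NAME": "color", "TYPE": "color", "DEFAULT": [1.0, 0.0, 0.0, 1.0]},
        {"NAME": "brightness", "TYPE": "float", "DEFAULT": 1.0, "MIN": 0.0, "MAX": 1.0}
    ]
}*/
void main() {
    gl_FragColor = vec4(color.rgb * brightness, color.a);
}
"""

metadata, glsl = parse_isf(EXAMPLE_ISF)
for item in metadata["INPUTS"]:
    print(item["NAME"], item["TYPE"])
# color color
# brightness float
```

A host would turn the `float` input into a slider using its MIN, MAX, and DEFAULT values, and the `color` input into a color picker.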

The ISF documentation pages include a Quick Start overview, an in depth look at writing GLSL code for real-time visuals, and useful reference notes.

Getting Started with ISF in Metal

This new open source release has three main repositories:

ISFMSLKit: ISFMSLKit is a Mac framework for working with ISF files (and the GLSL source code they contain) in a tech stack that uses Metal to render content. At runtime, it transpiles GLSL to MSL, caches the compiled binaries to disk for rapid access, and uses Metal to render content to textures. Everything you need to get started with ISF shaders in a Metal environment.

VVMetalKit: VVMetalKit is a Mac framework that contains several useful utility classes for working with Metal, such as managing a buffer pool, rendering, populating, converting, and displaying textures.

ISFGLSLGenerator: ISFGLSLGenerator is a cross-platform C++ lib that provides a programmatic interface for browsing and examining ISF files as well as generating GLSL shader source code that can be used for rendering. This library doesn't do any actual rendering itself - it just parses ISF files and generates shader code.

These frameworks are the same code that we have running under the hood in VDMX6 and are free for anyone to use in their own software.
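Part of generating shader code from an ISF file is turning its INPUTS into GLSL declarations. As a much-simplified, hypothetical Python illustration of that idea (not ISFGLSLGenerator's API or its exact output), each input can be mapped to a uniform of a matching GLSL type. Image inputs are deliberately left out, since a real generator typically emits extra helper declarations for them:

```python
# Simplified mapping from ISF input TYPE to a GLSL uniform type (assumption
# for illustration; image inputs are not handled in this sketch).
ISF_TO_GLSL = {
    "float": "float",
    "bool": "bool",
    "event": "bool",
    "long": "int",
    "color": "vec4",
    "point2D": "vec2",
}

def uniform_declarations(inputs):
    """Return GLSL uniform declarations for a list of ISF INPUTS entries."""
    lines = []
    for item in inputs:
        glsl_type = ISF_TO_GLSL.get(item["TYPE"])
        if glsl_type is None:
            raise ValueError(f"input type not handled in this sketch: {item['TYPE']}")
        lines.append(f"uniform {glsl_type} {item['NAME']};")
    return "\n".join(lines)

print(uniform_declarations([
    {"NAME": "brightness", "TYPE": "float"},
    {"NAME": "color", "TYPE": "color"},
]))
# uniform float brightness;
# uniform vec4 color;
```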

The ISFMSLKitTestApp running the Color Bars generator being processed by the Kaleidoscope filter.

All of the code needed for working with ISF in Metal can be found in ISFMSLKit.

This repository has several dependencies that are included, such as VVMetalKit and ISFGLSLGenerator.

The ISFMSLKitTestApp demonstrates the entire process of working with ISF under Metal:

Converting GLSL to MSL.

Caching converted shader code.

Loading, validating, and parsing ISF files.

Rendering ISF shaders to Metal textures as generators and FX.

Displaying the final image in a Metal view.

The three major classes you’ll be working with in ISFMSLKit are:

ISFMSLDoc: This class is a programmatic representation of an ISF document, and can be created either from the path to an ISF document or by passing it strings containing the ISF document's shaders. It performs basic validation, and parses the ISF's contents on init, allowing you to examine the ISF file's attributes. Behind the scenes, this class is basically a wrapper around VVISF::ISFDoc from the ISFGLSLGenerator library.

ISFMSLCache: This class is the primary interface for caching (and retrieving cached) ISF files. Caching ISF files precompiles their shaders, allowing for faster runtime access.

ISFMSLScene: A subclass of VVMTLScene used to render ISFs to Metal textures.

Detailed information on these classes and how to use them can be found in the ISFMSLKit readme file and documentation.

How does ISF in Metal work under the hood?

Because ISF v2 is an OpenGL / GLSL-based specification, ISF files must first be transpiled from GLSL to MSL before they can work in Metal. In a traditional OpenGL pipeline, behind the scenes ISF looks essentially like this:

This is where ISFMSLKit and SPIR-V come into play. SPIR-V is an intermediate shader representation from the Khronos Group: shaders can be compiled into it and cross-compiled out of it, which makes it a convenient bridge from one shading language to another. Converting GLSL to MSL happens in two parts:

GLSLangValidatorLib, which converts GLSL to SPIR-V.

SPIRVCrossLib, which converts SPIR-V to MSL.

These are provided as precompiled binaries in ISFMSLKit to reduce compilation times, but the source is also available if you are curious to see how they work, or you'd rather build your own.
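The same two stages can be tried outside ISFMSLKit with the standalone command-line front ends of these Khronos projects, glslangValidator and spirv-cross. The Python sketch below is a hypothetical helper, not how ISFMSLKit works internally (it uses the libraries directly). It assumes both tools are installed and on your PATH, and that the input is already complete GLSL rather than a raw ISF file. Real ISF output will often need extra options (for example, explicit layout locations and bindings) before it compiles under Vulkan-style rules, so treat these command lines as a starting point.

```python
import subprocess
from pathlib import Path


def transpile_commands(glsl_path: str, stage: str = "frag") -> list[list[str]]:
    """Build the argv lists for a GLSL -> SPIR-V -> MSL conversion."""
    src = Path(glsl_path)
    spv = src.with_suffix(".spv")
    msl = src.with_suffix(".metal")
    return [
        # Stage 1: compile GLSL to a SPIR-V binary.
        # -V targets Vulkan semantics, -S names the shader stage, -o sets the output file.
        ["glslangValidator", "-V", "-S", stage, str(src), "-o", str(spv)],
        # Stage 2: cross-compile the SPIR-V module to Metal Shading Language.
        ["spirv-cross", str(spv), "--msl", "--output", str(msl)],
    ]


def transpile(glsl_path: str, stage: str = "frag") -> Path:
    """Run both stages; raises CalledProcessError if either tool fails."""
    for cmd in transpile_commands(glsl_path, stage):
        subprocess.run(cmd, check=True)
    return Path(glsl_path).with_suffix(".metal")
```

Keeping command construction separate from execution makes it easy to inspect or log exactly what will run, for example with transpile_commands("shaders/kaleidoscope.frag") on a hypothetical shader path.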

Our updated diagram with the conversion to SPIR-V and MSL in place of GLSL is now something like this:

When using ISFMSLKit inside of VDMX6 or the included ISFMSLKit sample application, on launch, every unconverted GLSL-based ISF file is first converted to MSL, and then tracked for changes during runtime. The original ISF is left intact. Error logs are generated for files that fail to compile or translate for whatever reason. Files that successfully transpile are cached for later use.

Example error log for a GLSL shader that fails to compile.
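One simple way to get this "convert once, reuse until the file changes" behavior is to key each converted shader on a hash of its source. The Python sketch below is a hypothetical illustration of the idea, not ISFMSLKit's actual cache: the transpile callable stands in for the GLSL-to-MSL step, entries are kept in memory for brevity (ISFMSLKit caches compiled binaries on disk), and failures are recorded in an error log while the original source is left untouched.

```python
import hashlib
from typing import Callable, Optional


class TranspileCache:
    """Hypothetical cache that reconverts a shader only when its source changes."""

    def __init__(self, transpile: Callable[[str], str]):
        self._transpile = transpile                      # GLSL source -> MSL source; may raise
        self._entries: dict[str, tuple[str, str]] = {}   # path -> (source digest, MSL)
        self.errors: dict[str, str] = {}                 # path -> last error message

    def get(self, path: str, source: str) -> Optional[str]:
        digest = hashlib.sha256(source.encode("utf-8")).hexdigest()
        cached = self._entries.get(path)
        if cached is not None and cached[0] == digest:
            return cached[1]  # unchanged since the last conversion: reuse it
        try:
            converted = self._transpile(source)
        except Exception as exc:  # log the failure instead of crashing the host
            self.errors[path] = str(exc)
            self._entries.pop(path, None)
            return None
        self.errors.pop(path, None)
        self._entries[path] = (digest, converted)
        return converted
```

Calling get for every file at launch, and again whenever a file is edited, means only new or modified shaders pay the conversion cost.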

The process demonstrated in the ISFMSLKit repository is also a great starting point for developers looking to convert ISF files to other shader languages for other platforms, or as a reference for anyone looking for an example of SPIR-V in action.

Once the ISF files have been converted from GLSL to MSL, they are ready to be validated, parsed for input parameters, and rendered by the host application using ISFMSLKit or your own custom library.

In addition to providing the base for the ISF rendering classes in ISFMSLKit and its sample application, the new VVMetalKit contains a variety of general-purpose utilities for working with Metal. New and experienced developers alike will find this framework useful when creating Metal-based video apps on macOS.

Learning more about ISF

More information about ISF can be found on the isf.video website and examples can be found on the ISF Online Editor.

The set of open-source ISF files bundled with VDMX contains over 200 shaders, including useful utilities, standard filters, and a wide variety of FX for stylizing video, and is completely free for other developers to use.

We are always excited to hear from people using ISF – if you have any questions, feedback, or just want to send us some cool links to your work for us to check out, please send us an email at isf@vidvox.net.

Developers who add support for ISF to their own software can send us the URL of their website and a PNG icon to be included in the list of apps that support ISF.

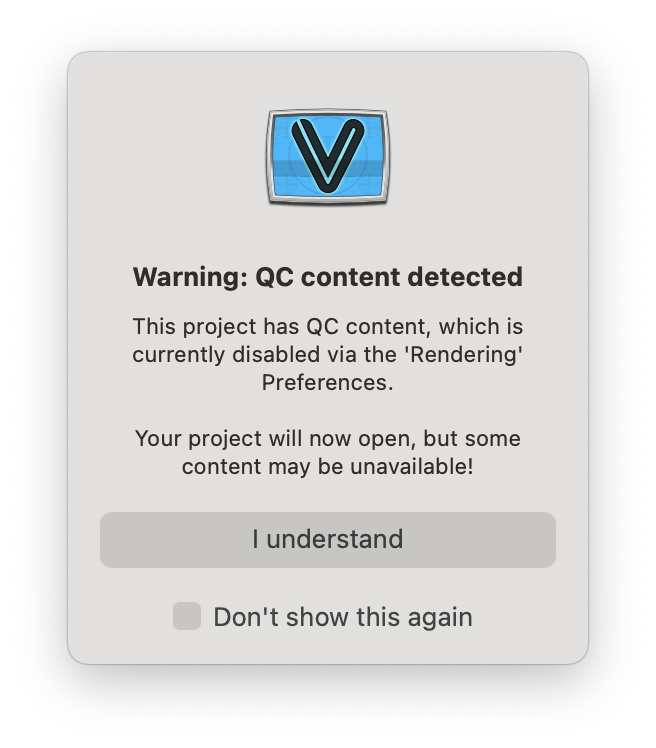

Sunsetting Quartz Composer in VDMX6

For over a decade, one of the most valuable tools in the Mac VJ toolkit has been Quartz Composer (QC). It empowered countless artists to create interactive visuals with a drag-and-drop approach that felt revolutionary. However, today it is with a heavy heart that we announce the official start of phasing out support for Quartz Composer in VDMX6 over the next several months as we replace the functionality with other options.

A Bit of Background

Quartz Composer debuted in 2005 as part of Apple’s Xcode suite, offering a node-based visual programming environment ideal for real-time video manipulation and generative art. It became a staple tool among VJs and multimedia artists, thanks to its seamless integration with macOS and its ability to work harmoniously with platforms like VDMX. However, QC's reliance on OpenGL, which Apple is now phasing out in favor of Metal, has created mounting challenges. Apple has stopped updating QC, leading to compatibility issues with each macOS release and causing developers to reconsider its long-term viability.

We had hoped that QC might survive a bit longer, but it’s time to acknowledge the technical hurdles. The majority of recent crash logs we’ve seen are directly tied to the OpenGL-to-Metal bridge required to keep QC functioning within VDMX6. This growing instability has made the transition away from QC a top priority for us.

What's Changing in the Short Term

QC Disabled by Default:

Quartz Composer support is now disabled by default in VDMX6. If you still rely on QC compositions, you can temporarily re-enable it by going to Preferences > Rendering.

Opening Existing Projects with QC:

When opening a project that uses QC compositions, you’ll see a warning letting you know that QC support must be turned back on to proceed.

Beyond That

Text File Playback:

VDMX6 still relies heavily on Quartz Composer for text file playback.

Replacing QC usage in this area is a top priority.

In the meantime, you may need to enable QC support to take advantage of this feature.

VDMX6 Plus Alternatives:

VDMX6 Plus users can switch to Vuo or TouchDesigner for more advanced text rendering options.

New Built-in Effects:

We’ve added alternatives to popular QC effects, starting with:

Blur Faces

Remove Background

Rutt Etra

Let us know what other QC-based assets you’d like us to prioritize in future releases!

A Final Goodbye to Quartz Composer

Quartz Composer gave us so many unforgettable moments, empowering visual artists around the world with its unique approach to generative art. But all good things must come to an end. If you’ve been a long-time fan like we have, please join us on the forums for a little memorial service and share some of your favorite QC memories with the community. Let’s celebrate all the incredible art QC made possible and look forward to what the future holds!

With change comes opportunity, and though it’s tough to say goodbye, we’re excited about the new possibilities that modern tools like Metal, Vuo, and TouchDesigner are bringing to the world of visual performance. Thanks for being on this journey with us—we can’t wait to see what you’ll create next!

Announcing VDMX6 and VDMX6 Plus!!!

Hello VDMX Fans!

We’re thrilled to announce the official release of the biggest update to VDMX in over a decade: VDMX6!

What’s changed? Oh, just the entire rendering engine.

VDMX6 is now powered by Metal, fully transitioning away from the deprecated OpenGL technology. This update not only prepares us for the future of Mac hardware and OS updates but also opens new doors for upcoming features, improvements, and even more optimizations.

What else is new?

Read on! Looking to download the new release or buy a license? Visit the new Vidvox.net homepage!

HAP R

One of the first benefits of our new Metal rendering engine is that we can add support for the latest addition to the HAP video codec family!

Following in the footsteps of HAP Q, we now have HAP R.

What do you need to know about HAP R?

The HAP R format creates files that are higher quality than HAP Q and support alpha channels, at about the same size.

The latest AVF Batch Exporter utility app in the Extras folder includes support for importing / exporting to the newest variation of HAP.

HAP R is more technically known as HAP 7A because it is based on the BC7 texture format.
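A quick way to see why HAP R can match HAP Q on size is to look at the texture formats underneath. BC7 stores each 4x4 block of pixels in 16 bytes, the same as the DXT5 blocks used by HAP Q's Scaled YCoCg encoding, while plain HAP (DXT1) uses 8 bytes per block. The Python sketch below computes the raw texture size of one frame; the format table is a simplified summary (it leaves out HAP Alpha and HAP Q Alpha), and real HAP files can come out smaller because frames may also be Snappy-compressed.

```python
# Bytes per 4x4 pixel block for the texture format behind each HAP variant
# (simplified: ignores HAP Alpha, HAP Q Alpha, and any Snappy compression).
BYTES_PER_BLOCK = {
    "HAP (DXT1)": 8,
    "HAP Q (Scaled YCoCg DXT5)": 16,
    "HAP R / HAP 7A (BC7)": 16,
}


def frame_bytes(width: int, height: int, bytes_per_block: int) -> int:
    """Size of one block-compressed frame, padding dimensions to whole 4x4 blocks."""
    blocks_x = (width + 3) // 4
    blocks_y = (height + 3) // 4
    return blocks_x * blocks_y * bytes_per_block


if __name__ == "__main__":
    for name, bpb in BYTES_PER_BLOCK.items():
        print(f"{name}: {frame_bytes(1920, 1080, bpb):,} bytes per 1080p frame")
```

At 1920x1080 this works out to 480 x 270 = 129,600 blocks, or 1,036,800 bytes per frame for HAP and 2,073,600 bytes for both HAP Q and HAP R, before any Snappy compression. HAP R spends the same bits per pixel as HAP Q; the quality and alpha gains come from BC7's more flexible block encoding.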

Other developers looking to update their own software to support HAP R will find technical details of the format on the HAP Specification and the HAP in AVFoundation framework GitHub pages useful in getting started.

Stay tuned for more details and benchmarks on HAP R in a future blog post!

Updated Automatic BPM Detection

Along with overhauling the rendering engine, we’ve rewritten the tempo tracking algorithm completely from scratch to run natively on both Intel and ARM64 processors. No more having to use Rosetta to take advantage of this crucial feature on newer Mac hardware!

Leaner and Cleaner

Along with the performance boost of switching the rendering engine over to Metal, we’ve optimized several other core parts of VDMX, making the app more efficient with a lower overall memory footprint.

New Pricing and Plus Version

One of the top concerns we hear from people who want to get started working with live visuals is the financial barrier to entry. Along with adding feature improvements, we understand that one thing we can do to make our software better is to make it more accessible to everyone, so we’ve decided to set the price of VDMX6 at 199 USD.

We have also introduced VDMX6 Plus at 349 USD for users who want to extend their experience by using TouchDesigner and Vuo compositions alongside the rest of the powerful features included in the standard version.

Students, teachers, and hobbyists can apply by email for our educational discounts of 50 USD off VDMX6 or 100 USD off VDMX6 Plus.

Upgrade Discounts

Existing VDMX5 users who purchased their license after September 12th, 2023 are entitled to a free update to VDMX6 Plus.

All other VDMX5 users can write in to receive a coupon for 50 USD off VDMX6 or 100 USD off VDMX6 Plus.

Customers who own a VDMX6 license can have the full price of their original purchase credited if they choose to upgrade to VDMX6 Plus later.

TouchDesigner Support!

Using TouchDesigner compositions in VDMX6

Yes indeed, as mentioned just above, in VDMX6 Plus you can now use TouchDesigner compositions as interactive generators, effects, and control data plugins.

Note that using TouchDesigner compositions in VDMX6 Plus requires a TouchDesigner installation and a valid paid TouchDesigner license (Education, Commercial, or Pro) on the same computer. Non-Commercial licenses will not work.

Example compositions can be found in the Extras folder on the VDMX6 DMG, and additional detailed information can be found in the VDMX documentation.

New ISF Generators and FX

No update to VDMX is complete without some new interactive video generators and FX to play with!

In this release we’ve got a new category of FX, Overlays, which include useful FX such as Doodler, Cursor, and Highlighter. These make it easy to add small elements on top of your video without having to do additional layer management.

There are also some fun new audio visualizers and glitch FX to play with:

Audio Waveform Shape

Pattern Glitch

Stylize Glitch

Improvements to Blackmagic and Video Capture Support

Blackmagic makes some of the most popular audio / video capture devices on the market, and we’ve just made using them with VDMX even better…

Use Blackmagic devices for simultaneous input and output, also known as full duplex mode, on supported devices.

Send audio streams to Blackmagic outputs.

Lower latency audio capture from Blackmagic inputs.

Apply key streams as alpha channels on supported devices.

Output alpha channels as external key streams on supported devices.

Themes and Interface Improvements

Find your favorite FX and sources even faster.

A major overhaul like this also deserves some new style, so we’ve included some new color themes and various minor interface tweaks throughout the app to improve overall usability.

One of the most useful UI additions is the new ‘Search’ field for quickly filtering the options in the layer source & FX menus.

Minimum and Recommended System Requirements

Minimum requirements: macOS 12; Intel or ARM64 Mac

Recommended OS: macOS 13 or later; M1 processor or later.

Sadly, we had to drop support for older OS releases that cannot take advantage of certain Metal features and optimizations introduced in macOS 12. But we will continue to host old versions of VDMX5 to support older systems. See VDMX Versions for details.

While VDMX6 will run on macOS 12 and Intel machines, the biggest performance gains are found in the newest OS releases and hardware where Metal itself is more optimized. We strongly recommend updating to macOS 13 or later where possible.

The Future???

With so many changes behind the scenes to create a stronger foundation for us to build on, one of the most exciting parts of the VDMX6 update is what will come next… make sure to keep your eyes on this blog, tag and follow us on Twitter, YouTube, and Instagram, and sign up for our email list to get the latest news from VIDVOX!

Updating from VDMX5? Read the FAQ!

We tried to make the process of updating from VDMX5 as seamless as possible: for example, opening an existing project file will create a copy that is automatically updated for the new version, and GLSL-based ISF shaders will be automatically transpiled to Metal shaders on launch.

Beyond that, we know that people updating from older versions have a ton of specific questions about the update process and we have created a VDMX5 upgrade FAQ page on this topic in the VDMX6 documentation. This page includes a listing of major changes to be aware of, and if needed we are also happy to answer questions you may have by email or on the forums.

With VDMX6 moving into Metal, some older plugins, custom FX, or ISF shaders may not be immediately compatible with the latest version. We recommend testing out the demo of VDMX6 first before making a full transition. If something is incompatible, VDMX will do its best to give you a warning. And the best part is, you can have VDMX5, VDMX6, and VDMX6 Plus all installed on the same machine without conflict!

Codie in Madrid, 2019, photo by Westley Hennigh-Palermo

Interview with Sarah GHP!

For this artist feature, we are joined by software developer and live video performer Sarah Groff Hennigh-Palermo to talk about how she got started with feedback loops, finding your own aesthetic style, and a whole lot more. You can also check out her guest tutorial that takes an EXTENSIVE behind-the-scenes look at her current Eurorack and live coding rig!

Who are you and what do you do?

My name is Sarah Groff Hennigh-Palermo, but since that is very long, I usually go by Sarah GHP.

(Early Codie Stills, 2019)

I do live visuals with my bands Codie (me & Kate Sicchio), Cable Knit Sweater (me & David Miller), and Electric Detectives (me, as Hue Archer, & Nancy Drone). Codie is more live code focused, with Kate and I building up a set from scratch with code, whereas Cable Knit Sweater is more modular focused, and David and I share signals across our rigs. Electric Detectives is somewhere in the middle. And then I make more fixed video pieces, from silent sketches to scored collaborations, like Pleco.

I also wrote a zine manifesto about computer-critical computer art with the folks at Nonmachinable that I am very proud of.

I focus a lot on improvisation and bright, gritty textures, and a lo-fi aesthetic. For me, this is a form of aesthetic resistance to the way computers are being deployed in the world. When we look at all the cute, dirty things old computers can do, or when we look at the neat things we can invent with open technology, we start seeing alternatives to all the smooth, coercive technology the world is filling up with.

What tools (hardware, software, other) do you use in your creative / work process?

Cable Knit Sweater in Nevers, France, 2022, unknown photographer

My primary tools are La Habra, a Clojurescript + Electron framework I wrote to live code SVGs; VDMX; and a Eurorack case with the LZX TBC2 and LZX Memory Palace inside, along with other useful modules. I also have a Fairlight CVI, a digital-analog synth from the 1980s, although I do not perform with it because it is gigantic! The Memory Palace is inspired by the CVI and although it is a lot more limited, it is a lot more portable.

Sometimes I also use Signal Culture's applications, especially Framebuffer and Interstream, or write one-off manipulation tools using p5.js.

To transform video signals throughout the system, I use a lot of Blackmagic converter boxes. (I go into these in more detail in my how to.)

A Blackmagic HyperDeck is useful for recording both computer and synthesizer output. GitLab saves my code, so I can at least remember where an improvisation ended.

An old CCTV camera or two are nice to have on hand for rescanning, which can be either a useful replacement for a series of converters or a way to capture glitchy footage that a converter would overcorrect. Video signals confused me for a long time, so I wrote up my notes, which might be helpful for anyone interested in them.

In your guest tutorial you describe how all of the various pieces of gear and software you are using all fit together, such as different techniques for video feedback. How do you go about trying out new things to find your own unique visual style and do you have any tips for people just getting started?

Sarah GHP in San Francisco, 2023, photo by Westley Hennigh-Palermo

Hm, that is a very good question. I think I was very lucky in that I had a definite aesthetic in mind when I started. It has changed a bit, but having a direction to aim for was helpful. I knew I loved two-dimensional polygons, a pop approach to color and pattern. That is partially nature — I just think about aesthetics a lot — and partially nurture — I grew up in Southern California, which is a land blessed by color and kitsch and fun, and then spent years in every modern art museum I could. So the Disneyland meets Lygia Pape vibe already was deeply embedded into my imagination.

Then in terms of further exploration, I would say I have a pretty constant push and pull between deliberation and happy accident. When I first started doing live code shows, every time I would encounter something I liked out in the world, in a museum or an ad or at a show, I would try to think about what a similar thing in La Habra would be like. Since I was trying to practice every day at that point, I could usually try it out pretty quickly and see what I liked. But then deliberation ended — I did not think about trying to capture it all — and I am sure I forgot like 50% of what I found out, but that is fine with me. If it is important, I will find it again.

Discovering feedback as a tool also worked in a similar accident–deliberate–accident structure. I had applied for a residency at Alfred University with a former member of Codie and then she couldn't go, so I went alone and spent the entire time working on video stuff. (Accident.) They had a Fairlight CVI and when I was running through a bunch of modes, I happened to hit one of the feedback presets when running a video of my work that had a background which just happened to match the chroma-keying, and the footage was amazing. (Accident.) But I couldn't replicate it the next day, because I did not understand it. So I went through all the modes (with the help of my husband Wes, who often serves as a studio assistant) and captured what I could to take it apart and try to understand it at home. This has taken years, and the purchase of a Fairlight, and I would say I understand a lot more. (Deliberation.) Then I do another round of improvisations and explorations where I might start with a question or intention, but inevitably get sidetracked or make a mistake, and then open up a whole new vein. (Back to the accidents.)

(La Habra + Interstream, 2022)

And then VDMX was the same. I started using it to route Syphon signals, tried out some effects for fun and had some quick wins, and was baffled by others. So I took some footage from an improvisation and systematically applied every effect in VDMX to it. Not only did I write down notes here, but I recategorized all the effects in my notebook by how they affected the kind of footage I make.

So have a goal, have a bunch of happy accidents, and then try to understand things so you can recreate the accidents. You will have more accidents and grow your list of things to understand.

For me, too, understanding actually means two things. It means understanding technically what is happening but also understanding where the outcome lives, aesthetically or art historically. For instance, I find it useful to be able to think about gritty visual dislocation and feedback in terms of, say, 90s design and Raygun magazine. This gives you pointers as to where to look for more inspiration and can help direct collaboration. The typography in my zine with Nonmachinable comes directly from this.

So, I guess my advice is:

Look for your starting point. What do you actually like? What do you like that isn't what everyone else likes? Being a bit of a hater (in your head!) is also a good way to find a starting point. Don't be mean, but it is useful to know what you do not want to do, especially if you aren't sure what you want to do.

Go out and fuck up a lot.

Find a balance between exploration and deliberation. Don't try to save everything because you will drive yourself mad.

Focus on understanding and avenues will open up around that.

Tips / suggestions for people looking to find live code shows / getting more into that community?

Codie as astronauts in Limerick, 2020, photo by Antonio Roberts

If you are in New York City or nearby, then I can recommend Livecode.NYC wholeheartedly. It is a friendly community, which puts on a ton of shows, often at Wonderville, in Bushwick.

Otherwise, TOPLAP has an event calendar, a list of nodes, and a Mastodon instance. Once you start getting to know folks from one scene, it is pretty easy to meet those from others.

Tell us about your latest round of touring in Europe and the US, and anything coming up next that you'd like to hype!

Sarah in Berlin, 2023, photo by Westley Hennigh-Palermo

Ha, well I am not sure I would call anything a tour. I am always trying to balance wanting to play more and having a day job and other hobbies, like swimming and improving my German.

I got my start in the US live code scene, being around for the early days of LivecodeNYC, so when I was back in the States in December I played with the SF A/V Club and then did a chill evening with my New York friends. Every city's live code scene is different and something I really treasure about New York is that there are still corners where the experimental DIY vibe can flourish.

And speaking of good vibes, in May, I did a talk, an anti-AI meme workshop, and a Cable Knit Sweater performance at Art Meets Radical Openness, a biennial conference run by Servus.at in Linz, and then played with Codie at Blaues Rauchen in Essen. Both were impeccable.

Coming up, I will be teaching a workshop at NØ SCHOOL NEVERS, a summer school in Nevers, France, and then Cable Knit Sweater will play again at the festival on the last weekend, July 12 to 14.

After that, not much is planned for performances, but in the future, I would really like to do some durational (6- or 8-hour) gallery performances, where the piece can evolve slowly, so anyone in Europe reading this who is interested should talk to me.

Looking to find more from Sarah GHP? Check out her website, follow hi codie on Instagram, and watch the guest tutorial showing off her live video rig!

‘assimilating' - Photo by S20

Mapping Festival 2024 interview with Hiroaki Umeda

One of the performers at Mapping Festival 2024 was the choreographer / dancer Hiroaki Umeda who performed their dance piece Median. Sadly our team didn’t arrive in Geneva until the following week, but fortunately we were able to connect with them over email for a quick interview!

1. Who are you and what do you do?

I am a choreographer, dancer, and visual artist from Japan, based in Tokyo. For most of my dance pieces, I do the choreography, compose the sound, and design the visuals. As an extension of my dance pieces, I also make visual installations.

2. What tools (hardware, software, and other) do you use in your work?

For sound creation, I have recently switched to Ableton Live. For visual creation, programmers on the production team use different tools such as TouchDesigner, openFrameworks, Houdini, etc. For operating the dance performance, the tools are Logic X, Max, VDMX, and TouchDesigner.

3. Tell us about your latest performance at Mapping Festival!

The piece was titled ‘Median’, a solo dance piece with video projections in which I dance myself. The concept of the piece was choreographing micro-scale visual materials and the human body. As a choreographer, I have an ambition to choreograph cells or other micro-scale objects, and this dance performance grew out of that. The programmers made heavy use of Voronoi programs in Houdini and oF.

‘Median’ - Photos by S20

4. Can you tell us about your process for designing videos to use as part of dance? Does one come before the other?

First, I set the basic concept of the show, the key visual, and the key choreography. It is like parallel processing. For me, designing visual materials, including lighting, costumes, etc., is part of choreography.

5. You have been working in this area for more than 10 years, what things have changed and what things are still the same about your workflow?

At the beginning of my career 20 years ago, I did all elements of the creation: sound, visuals, and choreography. Over time, the programming became more and more complicated and specialized, so I needed to work with programmers for visual elements and engineers for other technical parts like sensors. That was about 10 years ago. But recently, I see that software and programming tools have developed a lot and are designed for a wider range of users. I can easily use sophisticated and complicated programs by myself. For my latest dance piece, I did all the creation by myself, as at the beginning of my career, with TouchDesigner, Ableton Live, and Azure Kinect, thanks to all the developers!

Want to see more work from Hiroaki Umeda? You can find them on YouTube and on http://hiroakiumeda.com

Dispatch from Mapping Festival 2024

Once again we have traveled across the Atlantic to join the MadMapper team for the famous Mapping Festival in Geneva! As always, it was fantastic to spend time with amazing visual artists from all over the world, see some mind-blowing performances, and participate in workshops showing off the latest techniques in live visuals and projection mapping. We left having reconnected with old friends and met new ones, and we are eagerly awaiting our next chance to come back!

For those who could not make it to Geneva this year, we have published the slides and accompanying notes from our two-day workshop on getting started with using GLSL and ISF for creating real-time visuals and effects to use in apps like VDMX and MadMapper, which you can find here: https://bit.ly/isf-mapping-2024

We also have some photos to share! Geneva is a beautiful city and an absolute joy to visit.

And be sure to follow us on Instagram for more live updates and pictures from events like this!

Lighting and visuals for m83 in Brisbane

Who is the legendary Sarah Landau?

The circle of touring lighting designers is surprisingly tight-knit, and one of the names that will keep popping up is Sarah Landau. Along with being one of the most talented production designers and performers in the game right now, she’s also found a way to strike a balance between the worlds of nonstop touring and getting to enjoy life, which anyone who has done it will tell you is no easy task. When we first met Sarah back in 2013, she was on the road with Passion Pit and already well established as one of the top up-and-coming LDs in the game. Since then she’s worked with m83, Grimes, and a bucket full of other amazing musicians… and now we’ve finally gotten hold of her for a minute between jumps for a quick interview about her latest gigs and world travels!

Art and projection mapping with Lucinda Dilworth.

Lucinda Dilworth, a digital artist with Greek roots, aims to evolve as an artist to suit the modern, technology-driven world.

Her fusion of creativity, innovative thinking, and a deep understanding of the digital landscape sets Lucinda apart and drives her to push boundaries and explore the intricate relationship between art, technology, and artificial intelligence.

Video and Light: an Interview with Derrick Belcham

Derrick Belcham is a Canadian filmmaker based out of Brooklyn, NY whose internationally-recognized work in documentary and music video has led him to work with such artists as Philip Glass, Steve Reich, Laurie Anderson, Paul Simon and hundreds of others in music, dance, theater and architecture. He has created works and lectured at such institutions as MoMA PS1, MoCA, The Solomon R. Guggenheim Museum, The Whitney Museum Of American Art, Musee D'Art Contemporain, The Philip Johnson Glass House, Brooklyn Academy of Music and The Contemporary Arts Center of Cincinnati. His work regularly appears in publications such as The New York Times, Vogue, Pitchfork, NPR and Rolling Stone as well as being screened at short, dance and experimental festivals and retrospectives around the world.

Who are you, and what do you do?

I’m a multi-disciplinary, collaborative artist that generally focuses on moving images of some kind or another. I’ve made hundreds of films in performance documentary and traditional “music video”, but I usually use VDMX for the fun stuff… experimental and live productions. Particularly, I love using VDMX for its audio-reactivity tools with video files and DMX lighting systems.

With it, I built the lighting and projection design of three of my large-scale immersive productions in the 60,000 sq ft behemoth of the Knockdown Center a few years back, the last with 300+ custom lighting heads all triggered to the sound of musicians and actors inputted into VDMX. It’s a really amazing environment to map out and deploy the technology of a piece, and allow for so many indeterminate, playful outcomes as you do.

What tools do you use?

I like a combination of analog and digital tools in my process, or else the feeling of “transience” gets a bit too prevalent for me… I like to start with a notebook and pen with most things, imagining then designing specifics of a system. Sometimes a very specific image will appear, and then the process would be working backwards through a set of problems that allow me to reach that vision.

I use Red digital cinema cameras and Leica R glass (digital + analog marriage made in heaven) along with a Fujifilm GFX 100S for stills on the digital side, and then a Braun Nizo 8mm and a number of weird/wild 35 and 120 cameras on the purely analog side. I have a lot of different filters, pre-made and homemade, for certain effects and really like to try and keep as much as possible “in-camera” before it makes its way onto the computer.

With VDMX, my essential is an ENTTEC DMX interface and various dimmers and controllers to create custom shapes alongside traditional lighting heads. I use projectors quite heavily as well along with even haze to conjure all those Anthony McCall-type ephemeral shapes…

Last year, I made two projects with Bing & Ruth for the release of their latest, Species. David is an old friend, and I wanted to design a system in VDMX that he could operate almost like an EMS Spectron or one of the super early oscilloscope visual synths… I set up two stacked projectors in my darkened studio, hazed it to Irish winter dawn levels and put David in front of a set of knobs and sliders that shifted the shape, opacity, color and speed of the outputs from VDMX. A camera faced these shifting, volumetric rays and then displayed them in an inverted monitor (also in VDMX) so that he could “play” along to his song in realtime. The final video is one unedited sequence of David’s visual performance.

Past Work:

I've utilised VDMX as the driver/processor of the lighting and video-reactivity on videos with Dave Gahan, Julianna Barwick, Blonde Redhead, My Brightest Diamond, Simon Raymonde and so many more over the 10 years that I've been using the program.

You can see them all at derrickbelcham.com.

Recent and Upcoming Projects:

This last June, I went to Iceland to collaborate on two new pieces with Bergrun Snaebjornsdottir and Þóranna Dögg Björnsdóttir, which took me all over the island collecting visual samples from the natural environment. As I went, I took photos of instances of pareidolia any time they hit me (faces in the rocks, symbols in the clouds, etc.) and came back with a large repository. For a new art-metal project with Brooklyn composer Brendon Randall-Myers, I decided to mix those with the movements of a fantastic dancer named Jacalyn Tatro using a new sound-reactive process in VDMX. (That comes out this month, so I'll send the link when it's public! Sneak peek images here…)

Beyond that, I'm in the process of designing a new theatre piece fully within the VDMX environment that will use the performers' visual and auditory input as the source for a kind of large-scale looping playground… I'm very excited to keep experimenting with that over the next few months. I love the power that the program has, but also how invisible it can be within a live production… it is such a beautiful thing when the audience can be held in a space of unknowing when it comes to the mechanisms of the illusion.

From Analog to Digital with Paul Kendall

An interview with Paul Kendall, a composer, producer and visual artist.

From the past to the present, Paul Kendall has had a career innovating in music and sound. We caught up with him to get his story and talk about his evolution into the visual frontier with VDMX.

I come from a free jazz/ musique concrète beginning to a sound engineer/ mixer middle, culminating in a deafish composer/ visual artist attempting the final writes!

Between 1985 and 1997 I helped set up a series of studios for Daniel Miller's Mute Records in London. I worked as the in-house engineer, contributing to varying degrees to artists including Depeche Mode, Wire, Nick Cave, Nitzer Ebb, Barry Adamson, Renegade Soundwave, etc.

Also during my time at Mute I established a sadly short-lived label devoted to experimental electro-acoustic music, The Parallel Series.

Basement demo studio in Covent Garden, London, 1979. The technology was too expensive, so with 2 friends we used a couple of Revox 2-track tape machines, a basic console and a WEM Copicat, our only outboard effect.

Home demo studio, London, 1984. Technology was now relatively affordable: a 16-track Fostex tape machine, an Allen & Heath 16-input console, a DX7 synthesiser, a Drumtraks drum machine, a Time Matrix 8-tap digital delay and, unseen but there, a Great British Spring Reverb. Soon I added a BBC Micro computer running UMI MIDI sequencing software.

My first musical/ hardware love was the tape recorder. Around 1960 I heard the simple slow-down/ speed-up possibilities of an early 3-speed reel-to-reel, so it was sound more than music that fascinated me, but owning one was a total pipe dream, as was most of the interesting technology in the '60s and '70s. I settled for a tenor saxophone, and making noise was finally between my lips. A brief stay at the University of York allowed me to learn the Revox tape machine, tape loops and the VCS3 synthesiser, but once again, on my return to London, access to technology was limited. I had a job in a bank for 9 years, so I was able to fund setting up a small studio with 2 friends to learn basic band demo recording, using two 2-track machines and bouncing between them.

In 1984, following the sudden death of my mother, I decided to leave the bank and, with a small inheritance, bought the new, very affordable Fostex B16 16-track analogue tape machine, an Allen and Heath mixing console, a BBC Micro computer-based sequencer (UMI), a Yamaha DX7 synthesiser, a Drumtraks drum machine, most importantly an 8-tap digital delay unit called Time Matrix (almost an instrument in itself, a dub person's dream) and finally a Great British Spring Reverb.

I locked myself away to learn and experiment, and as good fortune would have it, Daniel Miller knew of my endeavours and asked me to help set up a similar facility where the Mute artists could demo and the lesser-known artists could record masters.

The studio developed over the next 20 years or so into a fully functioning 24-track facility. At the end of 1990 I became aware of a significant new development from America: the arrival of computer-based digital audio. It was the moment when I fully embraced the binary world. I bought a Mac IIci computer with Digidesign's Sound Tools software, for the first time enabling audio to be edited/ copied/ reversed/ EQ'd/ processed all within the digital domain. This may seem facile in the face of the acceleration of technology available today, but back then the effect was considerable.

Mute Main Studio, London, 1988, named Worldwide International. Studio design by Recording Architecture, photo by Neil Waving. This was the 2nd studio for Mute that I was involved in, and it was my home for the next 9 years. The first was based on my home studio (image 2) and was on the top floor of Rough Trade in King's Cross, London.

Mute Programming Suite, London, 1988, named The Means of Production. Studio design by Recording Architecture, photo by Neil Waving. A second studio at Mute, used initially by artists wishing to experiment and prepare their work before using the main studio.

One of the areas that benefited from digital audio, and that liberated my approach, was remixing. It was possible to perform lots of dub mixes on the console, bouncing them to DAT (previously the costs involved with analogue tape would have been prohibitive). These mixes could then be loaded into the computer and edited; this was a perfect example of performance/ dub being integrated into a final mix.

From this period until very recently, my work was based almost exclusively on a Mac running Pro Tools or Logic. However, due to severe hearing/ frequency loss over the last 10 years, I have been unable to pursue sound work on a computer, because many digital processes can generate spurious 'noise' and I would be oblivious to it.

Refocusing, I decided to return to my earlier love of musique concrète, using only mechanically generated sound (springs, bits of metal, etc.) processed through guitar pedals, with no computer involved. In addition to this, I started experimenting with visuals, whilst my eyes still function!

I was searching for a way to work with visuals the way I worked with sound. I began using a macro lens on my camera, zooming in on small details of an object, which is the equivalent of taking an existing sound and microscopically messing with it. I looked around for suitable software that could process the visuals and add a degree of performance too. This is when I discovered VDMX. I could slow down/ speed up/ reverse/ superimpose/ manipulate/ texturally shift visual material exactly as I would with audio, and with the addition of a Korg nanoKONTROL I could perform dubs on the visuals. Great result. This setup has served me well for a couple of years and will continue to do so. Obviously I am a novice in the visual field, which is in some ways liberating, as I have never learnt the 'rules'. The adage with sound, 'if it sounds right, it probably is right', can be applied to visuals/ light, or so I maintain!

Home studio, London, 1990. Fully embracing the newly available digital workstation from Digidesign, originally called Sound Tools before it evolved into Pro Tools. My setup was a Mac IIci, a DAT machine and an Atari 1040 running Creator MIDI software.

Home studio, West Sussex, 2021. In some ways this is the most powerful and tiniest setup of all: a MacBook Pro running Logic Pro, Final Cut and, of course, VDMX! Peripheral equipment: Shure SM7B, 8-channel mixer, Korg nanoKONTROL Studio, Zoom H6 6-track digital recorder.

Last May, after isolating since the beginning of March (my partner had Covid very early on), I was searching for some creative inspiration and got hold of a couple of iPad apps that process sound. I spent 3 intense days experimenting and improvising, using springs and things and voice as sources. Through editing, these improvisations morphed into a series of coherent pieces of music/ noise. An album from these, Boundary Macro, will be released on vinyl later in the year on Downwards Records and published through a return to Mute Song. So far I have finished 3 videos to accompany the album, all made with VDMX.

You can see more visual work from Paul on Vimeo or follow his Instagram. And if you’re curious to learn more about VDMX, visit our tutorials page to get started.