Catching glimpses of people using VDMX via Twitter is always great for us, and one of the best parts is getting to connect with people like Mike St. Jean, who share photos and videos from their latest gigs with amazing musicians, along with the behind-the-scenes details we crave about how it all comes together.

1. Who are you and what do you do?

I'm Mike St. Jean – I love music and technology and am always in search of ways to bring the two together and create new experiences for people. I have been working in the music industry, and more or less constantly on tour, since the summer of 2010. Mostly I design and program lighting and visuals for tours and festival shows, as well as for music videos and stand-alone and interactive gallery installations. I'm still a musician first, and I've played drums in lots of projects for as long as I can remember. More recently, I added playing keyboards to my role programming and operating the playback systems for the Devin Townsend Project, and I continue to do their lighting and visuals. While I have worked primarily in the 'Rock' world, with DTP, Steve Vai, Generation Axe, Anathema, TesseracT, Fear Factory, Gojira, and NinjaSpy, I have also enjoyed being part of EDM shows, doing both lighting and VJ'ing. Wherever there's an experience to make, I'm excited to be involved!

2. What tools (hardware, software and other) do you use to make it happen?

For visuals, I use VDMX and occasionally MadMapper. I started using VDMX in 2011, when I got a gig providing visuals for a European tour I was also lighting. I had made all of the visuals but hadn't yet figured out how to get them out of the laptop in sync with the music. The first night on the tour bus, I downloaded VDMX, learned it, and synced it up to our playback. I figured that if it was that intuitive, it was worth digging deeper into. I have been using it ever since.

For lighting, I primarily use ChamSys solutions: consoles, breakout racks, and their MagicQ software. I have found them to be very intuitive and functional, and they play nicely with VDMX and Ableton.

As my playback DAW, I use Ableton as much as possible, but I have used Cubase, Logic, and Pro Tools on different projects when the artist prefers them. Max4Live gives Ableton some pretty deep functionality, like converting MIDI to OSC or DMX/Art-Net, for starters.
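To make the MIDI-to-OSC idea concrete, here is a minimal sketch in Python of what such a translation involves, assuming the OSC 1.0 binary encoding and a hypothetical `/midi/ch<N>/cc<M>` address scheme. It is not how Max4Live or VDMX implement it; it just shows one MIDI Control Change becoming one OSC message with a float argument.

```python
import struct


def osc_string(text: str) -> bytes:
    """Encode an OSC-string: ASCII bytes, at least one null terminator, padded to 4 bytes."""
    data = text.encode("ascii") + b"\x00"
    return data + b"\x00" * (-len(data) % 4)


def midi_cc_to_osc(status: int, controller: int, value: int) -> bytes:
    """Translate one MIDI Control Change into an OSC message carrying a single float32.

    The /midi/ch<N>/cc<M> address layout is a hypothetical naming scheme.
    """
    if status & 0xF0 != 0xB0:
        raise ValueError("not a MIDI Control Change status byte")
    if not (0 <= controller <= 127 and 0 <= value <= 127):
        raise ValueError("MIDI data bytes must be in the range 0-127")
    channel = (status & 0x0F) + 1           # status nibble 0-15 -> channel 1-16
    address = f"/midi/ch{channel}/cc{controller}"
    normalized = value / 127.0              # 0-127 -> 0.0-1.0, e.g. for an opacity slider
    # Address, type tag string (",f" = one float32), then the big-endian float itself.
    return osc_string(address) + osc_string(",f") + struct.pack(">f", normalized)
```

To actually send the message, hand the bytes to a UDP socket's `sendto` aimed at whatever host and port the receiving app is listening on. Normalizing to 0.0-1.0 is a choice made here because many OSC receivers expect slider-style ranges; other mappings are just as valid.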

You can do so much over networks now: Ableton, VDMX, and ChamSys can all communicate through MIDI, OSC, DMX, and more. It's amazing.

I recently picked up a BomeBox as a solution for my ever-growing touring rig with DTP. It's "the solution for connecting and mapping devices via MIDI, Ethernet, WiFi, and USB". I've got five USB keyboards and other controllers going into the BomeBox via a USB hub, and from there I run a Cat5 cable to a network switch/router combo. I used to be tethered by a 6-foot USB cable, but now the two rigs can be as far apart as the network allows. We'll be adding another one on the next tour to send MIDI across the stage and change guitar patches on the Fractal Axe-Fx units.

For interacting with VDMX, any device will work; that's part of its brilliance! I still primarily use traditional USB-MIDI devices, but I have also used TouchOSC over Wi-Fi, and DMX from the ChamSys console at front of house, to do everything from cueing and ejecting videos to mixing and limiting opacities to match the overall look and brightness on stage. Another great little piece of hardware for that is the Enttec Art-Net/DMX box, which can convert DMX to Art-Net and vice versa.
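For anyone curious what "DMX over Art-Net" looks like on the wire, here is a rough Python sketch that builds a single ArtDmx packet following the layout in the Art-Net specification (protocol version 14). The function name is hypothetical, and this is not the firmware of the Enttec box or any console; it only shows how one universe of DMX channel values gets wrapped for UDP.

```python
import struct

ARTNET_PORT = 6454  # standard Art-Net UDP port


def artdmx_packet(universe: int, channels: bytes, sequence: int = 0) -> bytes:
    """Wrap up to 512 DMX channel values in an ArtDmx packet for one 15-bit Port-Address."""
    if not 0 <= universe < 32768:
        raise ValueError("the Art-Net Port-Address is 15 bits")
    if not 1 <= len(channels) <= 512:
        raise ValueError("a DMX universe carries 1-512 channels")
    data = bytes(channels)
    if len(data) % 2:
        data += b"\x00"  # ArtDmx requires an even data length
    header = (
        b"Art-Net\x00"                                       # packet ID
        + struct.pack("<H", 0x5000)                          # OpCode OpDmx, little-endian
        + struct.pack(">H", 14)                              # protocol version, big-endian
        + bytes([sequence & 0xFF, 0])                        # sequence (0 = off), physical port
        + bytes([universe & 0xFF, (universe >> 8) & 0x7F])   # SubUni (low byte), Net (high 7 bits)
        + struct.pack(">H", len(data))                       # data length, big-endian
    )
    return header + data
```

Sending `artdmx_packet(0, bytes([255, 128, 0]))` over UDP to port `ARTNET_PORT` on a node would set the first three channels of universe 0; a real controller would also resend frames continuously rather than once.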

3. Tell us about your latest projects!

I am currently working on the lighting design, and hopefully the visuals, for an upcoming, as-yet-unannounced tour. I am really excited to have the opportunity to push my own boundaries and work with some really creative friends. I've found myself a new studio space and will be doing a lot of R&D over the next six weeks with projectors, LED screens, MadLight, intelligent lighting fixtures, and of course VDMX. I've used VDMX in the past to send audio-reactive visuals to RGB light fixtures; I would really like to realize that on a much larger scale.

There are a couple of projects in the early stages: a couple more music videos and two VR projects. I am really excited to explore VR that combines live-action 360 video with projection mapping in the scene.

I've also just found out about the Cue List plugin for VDMX and am really excited to start working it into my workflow. In the past, I built my own 'Playlist' Control Surface plugin so that I could launch the videos, fades, effects, etc. appropriate to each song in a live set. Hooking this up to SMPTE or MTC is a really promising development for how I use VDMX in pre-programmed concerts.

I am also finishing up my own music release. Eventually there will be a live performance element, and VDMX, ChamSys, and Ableton will all be very important to that process.

Devin Townsend Project at Royal Albert Hall – probably my most epic use case. For this one-off event, I oversaw the making of the visuals and composed many of them myself, then built and programmed the VDMX session and ran it through MadMapper. Ableton ran the playback, which triggered the video files in VDMX and cued all of the lighting, running on ChamSys MagicQ. We programmed the lighting earlier in the day and had an operator at FOH watching the levels while the automation ran the cues. Ableton also triggered all of my keyboard patch changes, so I could just enjoy playing on the day.

Full write-up of that event is here: "The band, aided by 5,000 of their loyal fans, delivered one of the most unique productions the 19th century concert venue has ever witnessed." (All above images: Christie Goodwin www.christiegoodwin.com)

Another special night for me was the Devin Townsend Project at Brixton Academy. We ran Ableton and VDMX again; this time VDMX had to trigger three videos per song, sent through a Matrox TripleHead2Go to the four different video elements on stage.

Steve Vai Passion & Warfare 25th Anniversary

Photo by Mike St. Jean

This project presented me with some new challenges. First, we were using Pro Tools, which proved to be as easy to integrate with VDMX as everything else to date ;)

Second, the band usually played to a click track, which meant the drummer would cue Pro Tools with a foot pedal and Pro Tools would trigger VDMX, so the clips were always in sync with the band: things like special guests showing up on-screen and 'jamming' with the band in time with the music. To complicate matters, though, there were a few songs where the drummer would stop the playback mid-song and the band would jam to a looping video in VDMX, which had been cued when the first in-sync video ran out. The drummer would then restart the Pro Tools playback and click, which in turn had to launch a third video that continued in time with the band. It took a bit of problem solving at the time, but since pretty much anything is possible in VDMX, I just built an adapted setup, and voilà. The band has since played dates when I haven't been available, and the monitor engineer and even the drum tech have been able to get VDMX running and fit (perspective-transformed) to each day's unique video wall, all without any major hitch!
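Mike doesn't spell out how his adapted setup works, so the following is only a hypothetical sketch of the cue logic described above, modeled as a tiny state machine in Python. The class, method, and clip names are invented for illustration; in a real rig the "playback started" and "clip ended" events would come from the DAW and the video software, and how they are wired up is outside this sketch.

```python
from __future__ import annotations

from dataclasses import dataclass, field
from typing import Optional


@dataclass
class SongCues:
    """Hypothetical cue logic for one song: synced clip, free-running jam loop, synced clip."""

    synced_clips: list[str]            # launched, in order, each time the DAW (re)starts playback
    jam_loop: str                      # loops while the band jams without the click
    launched: list[str] = field(default_factory=list)  # log of every clip launched
    next_index: int = field(default=0, init=False)     # which synced clip comes next

    def on_playback_start(self) -> Optional[str]:
        """The drummer's pedal (re)started the DAW: launch the next synced clip, replacing the loop."""
        if self.next_index >= len(self.synced_clips):
            return None  # nothing left to cue for this song
        clip = self.synced_clips[self.next_index]
        self.next_index += 1
        self.launched.append(clip)
        return clip

    def on_synced_clip_ended(self) -> Optional[str]:
        """A synced clip ran out: if more synced sections follow, fall into the jam loop."""
        if self.next_index < len(self.synced_clips):
            self.launched.append(self.jam_loop)
            return self.jam_loop
        return None  # last section finished; the song is over
```

The key design point is that the loop is cued by the first synced clip *ending* rather than by the playback stopping, so the visuals never go dark in the gap, and the restart always picks up the next synced section in order.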

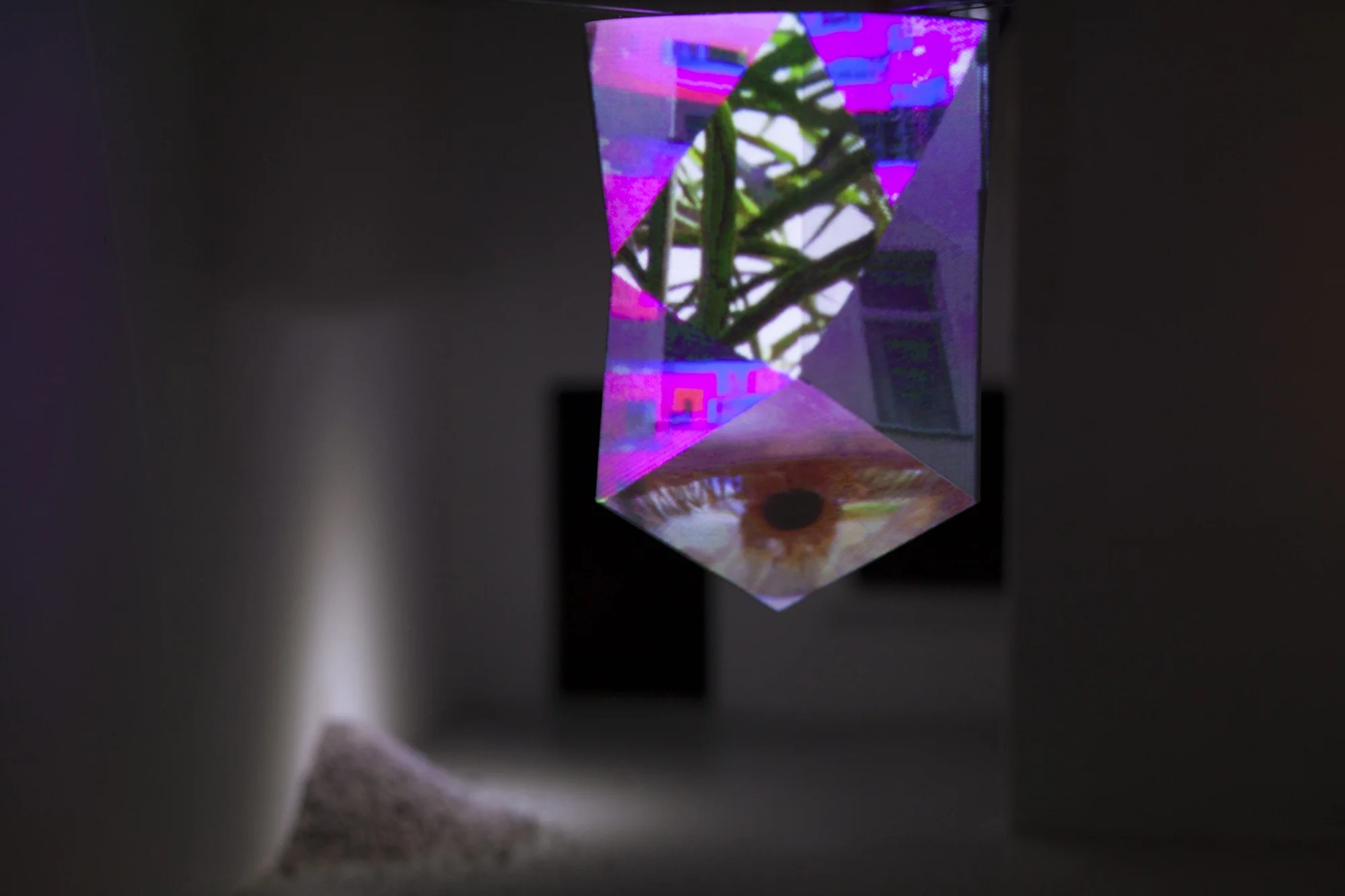

You Are Being Timed – Projection mapping installation. Collaboration with curator Ashlee Conery and multimedia artist Pawel Dwulit.

For this project, I teamed up with multimedia journalist Pawel Dwulit and curator Ashlee Conery to combine technology with personal narrative. We used VDMX alone to map the shape of the obelisk I had designed, to randomly play various media pieces, and to apply random distortion effect layers.

From the gallery write-up:

"What can be expected of a creator when he appropriates a sculpture produced by a 3D printer and designed by a designer, when he defies the public with 'endurance performances', or when he tests the minimum transformations necessary for an object to become a work of art? Works created from, in and for the space of the two exhibitions will explore the interstices of an art between performance and interaction."

ZTV 3-Episode Mini-Series – To promote the release of Devin Townsend's Z2, and to accompany the live tour as part of the stage visuals, we conceptualized, designed, wrote, shot, edited, and completed three episodes in collaboration with Devin.

I was responsible for:

- Production & coordination

- Logistics and execution of the live projection of various background environments (no green screen), for which we used VDMX

- Practical lighting

- Live and on-site audio playback and recording [Ableton]

- Real-time interactive 'spaceship console' lighting, for which we used VDMX with audio-reactive input. Pre-recorded dialogue from 'The Collective' as it spoke to Ziltoid was triggered and played in Ableton and routed through Soundflower to VDMX, where it drove the opacity/intensity of a color layer. We fed that layer to the video-capture-to-DMX plugin to set the color and intensity of an LED par can under the desk.
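The signal chain in that last item (audio level, then layer opacity, then DMX color and intensity) can be sketched in a few lines of Python. This is a minimal illustration under our own assumptions: a three-channel RGB par, an RMS level per audio block with simple attack/release smoothing, and a linear opacity-to-intensity mapping. It isn't how VDMX's audio analysis or its video-capture-to-DMX plugin work internally.

```python
from __future__ import annotations

import math


def rms(samples: list[float]) -> float:
    """Root-mean-square level of one block of audio samples in the range -1.0 to 1.0."""
    if not samples:
        return 0.0
    return math.sqrt(sum(s * s for s in samples) / len(samples))


class EnvelopeFollower:
    """Smooths block levels so the light swells and decays instead of flickering."""

    def __init__(self, attack: float = 0.5, release: float = 0.1) -> None:
        self.attack = attack    # fraction of the gap closed per block while the level rises
        self.release = release  # fraction of the gap closed per block while it falls
        self.level = 0.0

    def process(self, block_level: float) -> float:
        coeff = self.attack if block_level > self.level else self.release
        self.level += coeff * (block_level - self.level)
        return self.level


def color_to_dmx(rgb: tuple[float, float, float], opacity: float) -> bytes:
    """Scale a 0-1 RGB color by a 0-1 opacity and quantize to three 8-bit DMX values."""
    opacity = min(max(opacity, 0.0), 1.0)
    return bytes(round(min(max(c, 0.0), 1.0) * opacity * 255) for c in rgb)
```

Per audio block, something like `color_to_dmx(layer_color, follower.process(rms(block)))` yields the three channel values for the par's start address. A lower `release` makes the light linger after each line of dialogue; a higher `attack` makes it jump on each syllable.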

The (amazingly ridiculous) videos can be seen here:

Pretty epic, right? If you want to keep tabs on the latest from Mike, be sure to follow him on Twitter / Facebook and bookmark his website!