In a recent blog post we dove into the results from various quality metrics comparing HAP with other video codecs. While we ran all of our tests using PSNR (Peak Signal-to-Noise Ratio), SSIM (Structural Similarity Index Measure), and VMAF (Video Multi-Method Assessment Fusion), we did not go into much detail about what each of these three metrics actually means or how they are used to evaluate the quality of image compression.

As a recap of those metrics:

PSNR: Peak Signal-to-Noise Ratio measures the pixel-by-pixel error across all color channels, relative to the maximum possible pixel value. Measured in decibels (dB); higher values are better.

SSIM: Structural Similarity Index Measure compares two images by considering luminance, contrast, and structure. Values typically range from 0 to 1, with 1 meaning the images are identical.

VMAF: Video Multi-Method Assessment Fusion is Netflix's perceptual quality metric, designed to correlate with human visual perception. Scores range from 0 to 100, with higher being better.

Computing PSNR scores.

Whereas PSNR is a direct numerical measure of how much error a lossy compression introduces, SSIM and VMAF take into account perceptual qualities such as structural similarity and contrast. While PSNR values are extremely useful, SSIM and VMAF are more closely tuned to how the human eye actually perceives color and brightness information. A high PSNR score doesn’t always mean high-quality image compression, especially in real-world situations.
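To make the difference concrete, here is a minimal sketch in Python, assuming 8-bit grayscale images stored as flat lists of samples. `psnr` follows the standard definition, 10·log10(MAX² / MSE). `global_ssim` is a deliberately simplified SSIM that treats the whole image as a single window; real SSIM implementations average this formula over many small local windows, so treat the numbers as illustrative only. The helper names and test images are hypothetical.

```python
import math

MAX_VALUE = 255.0  # assumption: 8-bit grayscale samples


def psnr(reference, test):
    """Peak Signal-to-Noise Ratio in dB between two equal-length sample lists."""
    if len(reference) != len(test):
        raise ValueError("images must have the same number of samples")
    mse = sum((r - t) ** 2 for r, t in zip(reference, test)) / len(reference)
    if mse == 0:
        return math.inf  # identical images have no error at all
    return 10.0 * math.log10(MAX_VALUE ** 2 / mse)


def global_ssim(reference, test):
    """Simplified SSIM using one window that covers the whole image.

    Standard SSIM averages this formula over many small local windows.
    """
    n = len(reference)
    mu_x = sum(reference) / n
    mu_y = sum(test) / n
    var_x = sum((x - mu_x) ** 2 for x in reference) / n
    var_y = sum((y - mu_y) ** 2 for y in test) / n
    cov = sum((x - mu_x) * (y - mu_y) for x, y in zip(reference, test)) / n
    c1 = (0.01 * MAX_VALUE) ** 2  # small constants that keep the ratios stable
    c2 = (0.03 * MAX_VALUE) ** 2
    luminance = (2 * mu_x * mu_y + c1) / (mu_x ** 2 + mu_y ** 2 + c1)
    contrast_structure = (2 * cov + c2) / (var_x + var_y + c2)
    return luminance * contrast_structure


# A smooth gradient, the same gradient brightened by 10, and the gradient
# with alternating +10/-10 noise. Both distortions have identical MSE (100).
reference = [2 * i for i in range(64)]
brightened = [v + 10 for v in reference]
noisy = [v + (10 if i % 2 == 0 else -10) for i, v in enumerate(reference)]

print(psnr(reference, brightened), global_ssim(reference, brightened))
print(psnr(reference, noisy), global_ssim(reference, noisy))
```

Both distortions get the same PSNR (about 28.1 dB) because their mean squared error is identical, but the simplified SSIM scores the brightness shift, which preserves the gradient's structure, higher than the noise.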

Fortunately, in the case of HAP R we saw improvements in PSNR, SSIM, and VMAF compared to HAP and HAP Q, showing that it is a huge step forward in quality on all fronts. The trade-off is slower compression times, because HAP R is more computationally expensive to encode.

A closely related topic is the set of models and gamuts used to represent color information. While we tend to think of an image processing pipeline such as VDMX as representing every pixel as an RGBA value, behind the scenes there is a lot more going on to make sure that the colors captured by your camera appear on screen the way you expect them to.

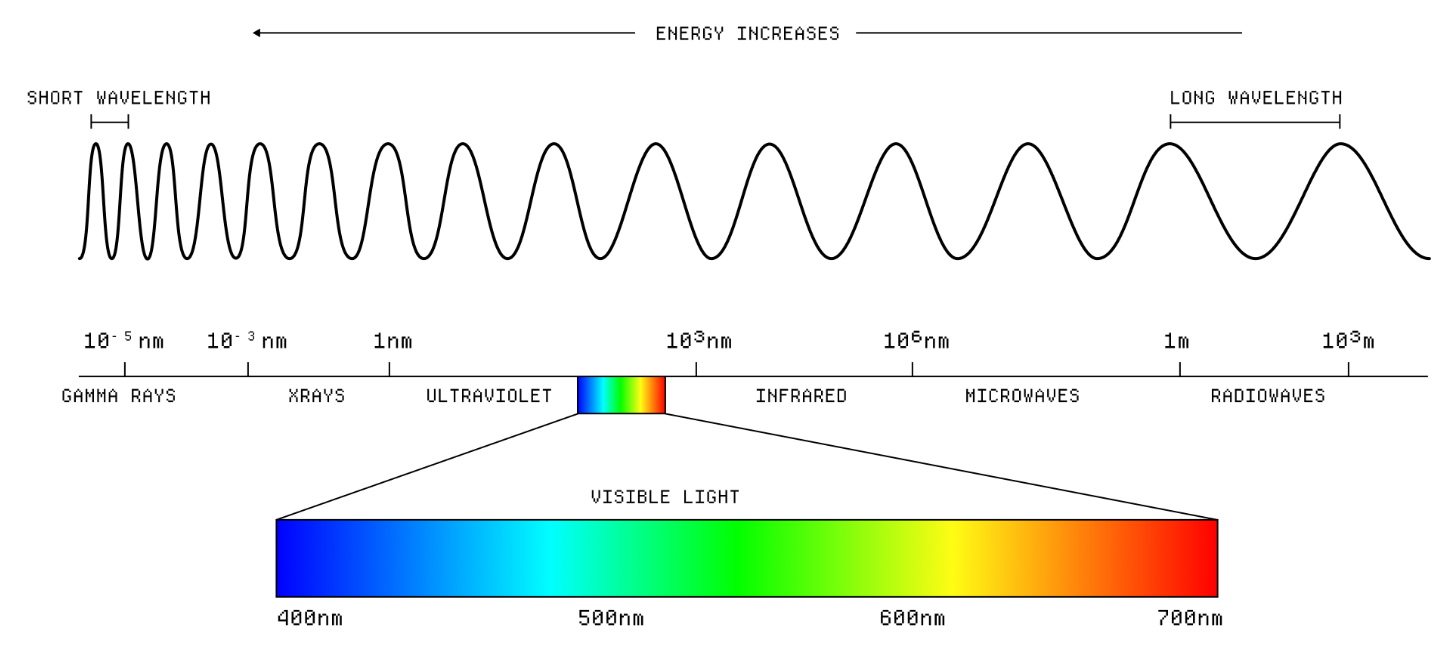

The Making Software blog (written and illustrated by Dan Hollick) has a wonderful write-up, ‘What is a color space?’, with an in-depth lesson on ‘color’ as it exists in the real world (wavelengths of light), how those wavelengths are interpreted by our eyes (with three types of ‘cones’), how color is represented on your computer display (as pixels), and all the different ways colors are stored digitally, optimized for different gamuts (ranges) of color.

“Digital color is about trying to map this complex interplay of light and perception into a format that computers can understand and screens can display.”

‘What is color?’ illustration from Dan Hollick’s ‘What is a color space?’ blog post.

While reading through the Making Software post, keep in mind how details like this come into play when measuring compression quality with simple error-based metrics like PSNR versus perceptual metrics like SSIM and VMAF:

“There is also a non-linear relationship between the intensity of light and our perception of brightness. This means that a small change in the intensity of light can result in a large change in our perception of brightness, especially at low light levels. You can see this in action in these optical illusions, where the perceived brightness of a color can change based on the surrounding colors.”

Optical illusion from ‘What is a color space?’: the perceived brightness of a color can change based on the surrounding colors.
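One practical consequence of this non-linearity is that common color spaces such as sRGB do not store light intensity linearly. Instead, values pass through a non-linear transfer curve that spends more of the available code values on darker tones, where our eyes notice small changes most. Below is a minimal Python sketch of the standard piecewise sRGB transfer functions for a single channel in the range 0 to 1; the function names are hypothetical.

```python
def srgb_to_linear(c):
    """Decode one sRGB-encoded channel value in [0, 1] to linear light."""
    if c <= 0.04045:
        return c / 12.92  # short linear segment near black
    return ((c + 0.055) / 1.055) ** 2.4


def linear_to_srgb(c):
    """Encode one linear-light channel value in [0, 1] with the sRGB curve."""
    if c <= 0.0031308:
        return c * 12.92
    return 1.055 * c ** (1 / 2.4) - 0.055


# The 8-bit code value 128 sits halfway up the encoded range,
# but corresponds to only about a fifth of the linear light intensity.
print(srgb_to_linear(128 / 255))
```

This is why a pixel value of "50%" in an sRGB image is noticeably less than half the physical light of full white, and why error measured on encoded values (as PSNR usually is) is not the same as error in light or in perception.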

Another interesting historical example of a color space shaped by technical requirements is YIQ, used by the NTSC standard during the pre-digital transition from black & white to color television. From the Wikipedia page on NTSC:

“NTSC uses a luminance-chrominance encoding system, incorporating concepts invented in 1938 by Georges Valensi. Using a separate luminance signal maintained backward compatibility with black-and-white television sets in use at the time; only color sets would recognize the chroma signal, which was essentially ignored by black and white sets.

An image along with its Y′, U, and V components, respectively (Wikipedia)

The red, green, and blue primary color signals (R′G′B′) are weighted and summed into a single luma signal, designated Y′ which takes the place of the original monochrome signal. The color difference information is encoded into the chrominance signal, which carries only the color information. This allows black-and-white receivers to display NTSC color signals by simply ignoring the chrominance signal. Some black-and-white TVs sold in the U.S. after the introduction of color broadcasting in 1953 were designed to filter chroma out, but the early B&W sets did not do this and chrominance could be seen as a crawling dot pattern in areas of the picture that held saturated colors.”
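The weighting described above can be sketched in a few lines of Python. This assumes gamma-corrected R′G′B′ values in the range 0 to 1 and uses the commonly cited approximate NTSC coefficients for Y′, I, and Q; the function names are hypothetical. A black-and-white receiver is modeled as simply keeping Y′ and discarding the two chroma components.

```python
def rgb_to_yiq(r, g, b):
    """Convert gamma-corrected R'G'B' in [0, 1] to NTSC YIQ (approximate coefficients)."""
    y = 0.299 * r + 0.587 * g + 0.114 * b  # luma: all a B&W set needs
    i = 0.596 * r - 0.274 * g - 0.322 * b  # in-phase chroma component
    q = 0.211 * r - 0.523 * g + 0.312 * b  # quadrature chroma component
    return y, i, q


def black_and_white_set(yiq):
    """A monochrome receiver ignores the chroma components entirely."""
    y, _i, _q = yiq
    return y


print(rgb_to_yiq(1.0, 1.0, 1.0))                       # white: no chroma
print(black_and_white_set(rgb_to_yiq(1.0, 0.0, 0.0)))  # pure red shown as gray
```

Because each chroma row of coefficients sums to zero, any neutral gray produces I = Q = 0, so the color information only appears where the picture is actually colored.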

While YIQ is not commonly used outside of NTSC, you are more likely to run into YUV or one of its variations. In fact, the HAP Q codec uses a similar color space, YCoCg, with a shader that quickly converts the pixel data to and from RGB as part of the pixel pipeline.
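For a sense of why YCoCg is cheap to convert, here is a minimal Python sketch of the plain, unscaled YCoCg transform and its inverse. This is not HAP Q's actual shader: HAP Q uses a scaled variant of YCoCg, and its conversion runs on the GPU. The function names are hypothetical.

```python
def rgb_to_ycocg(r, g, b):
    """Forward YCoCg transform: luma plus orange and green chroma."""
    y = 0.25 * r + 0.5 * g + 0.25 * b
    co = 0.5 * r - 0.5 * b
    cg = -0.25 * r + 0.5 * g - 0.25 * b
    return y, co, cg


def ycocg_to_rgb(y, co, cg):
    """Inverse transform, using only additions and subtractions."""
    tmp = y - cg
    return tmp + co, y + cg, tmp - co


print(rgb_to_ycocg(1.0, 0.5, 0.0))
print(ycocg_to_rgb(*rgb_to_ycocg(1.0, 0.5, 0.0)))
```

The forward transform needs only additions and multiplications by powers of two, and the inverse needs only additions and subtractions, which is part of what makes it attractive for real-time playback.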

When it comes to color representations in digital image and video codecs, the choice of color space is part of the design. When storing pixel data as YUV, more of the data goes to the luma (brightness) channel and less to the chroma (color) channels than in RGB-based codecs. This typically results in slightly lower color accuracy, with the benefit of improved brightness and contrast accuracy. Because the human eye is more sensitive to differences in brightness, it can be beneficial in some cases to use color spaces tuned to these qualities. Similarly, metrics designed to evaluate how noticeable compression is to the human eye, rather than how well it performs numerically, take these characteristics into account. The time it takes to convert to and from RGB also needs to be considered when optimizing compression algorithms for real-time use.
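One common way YUV-style formats spend less data on chroma is chroma subsampling, such as 4:2:0, where each 2×2 block of pixels shares a single pair of chroma samples while luma keeps full resolution. The Python sketch below, assuming 8-bit samples and a row-major list per plane, averages 2×2 chroma blocks and compares raw frame sizes. It illustrates the general technique only; it is not a claim about how any particular HAP variant stores data, and the helper names are hypothetical.

```python
def subsample_420(plane, width, height):
    """Average each 2x2 block of a row-major chroma plane, as in 4:2:0."""
    out = []
    for y in range(0, height, 2):
        for x in range(0, width, 2):
            block = [
                plane[yy * width + xx]
                for yy in range(y, min(y + 2, height))
                for xx in range(x, min(x + 2, width))
            ]
            out.append(sum(block) / len(block))
    return out


def frame_bytes(width, height):
    """Raw 8-bit frame size: full RGB versus Y plus 4:2:0 chroma."""
    rgb = 3 * width * height
    luma = width * height
    chroma = 2 * ((width + 1) // 2) * ((height + 1) // 2)  # two half-res planes
    return rgb, luma + chroma


print(frame_bytes(1920, 1080))
print(subsample_420([0, 0, 10, 10, 0, 0, 10, 10], 4, 2))
```

For a 1080p frame, the 4:2:0 layout needs half the raw bytes of full RGB, with all of the savings taken from color detail rather than brightness.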

Beyond all this, image processing FX often use a different color space for a variety of reasons. For example, a hue shift is a difficult operation to perform on RGB values, but very easy to do in HSV or HSL. If you look at the code behind the Color Controls ISF, you’ll notice that each pixel is converted from RGB to HSV, adjusted based on the input parameters, and then converted back to RGB for the output.

Basic GLSL code from Color Controls.fs for converting RGB to HSV and HSV to RGB.
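The same convert, adjust, convert-back pattern can be sketched in Python using the standard library's `colorsys` module. This is not the Color Controls ISF code, which is written in GLSL; `hue_shift` is a hypothetical helper that takes RGB values in the range 0 to 1 and a shift in degrees.

```python
import colorsys


def hue_shift(r, g, b, degrees):
    """Rotate the hue of an RGB color by converting through HSV."""
    h, s, v = colorsys.rgb_to_hsv(r, g, b)  # h is in [0, 1), not degrees
    h = (h + degrees / 360.0) % 1.0         # rotate around the color wheel
    return colorsys.hsv_to_rgb(h, s, v)


print(hue_shift(1.0, 0.0, 0.0, 120))  # red rotated a third of the way: green
print(hue_shift(0.5, 0.5, 0.5, 90))   # gray has no saturation, so it is unchanged
```

In HSV the shift is a single addition on one component; doing the same rotation directly on R, G, and B would require mixing all three channels together.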

The end of Dan’s blog post on color spaces sums it all up very nicely:

“So what have we learned? Digital color is a hot, complicated mess of different color spaces, models, and management systems that all work together to try to make sure that the color that gets to your eyeball is somewhat close to what was intended.”

What more could we ask for?