For those of us who couldn't make it behind the stage during the LCD Soundsystem reunion show at Coachella 2016 to see how the audio reactive visuals worked, Nev Bull (aka master media server programmer from pixelsplus) and Stefan Goodchild have sent us these field reports with details...

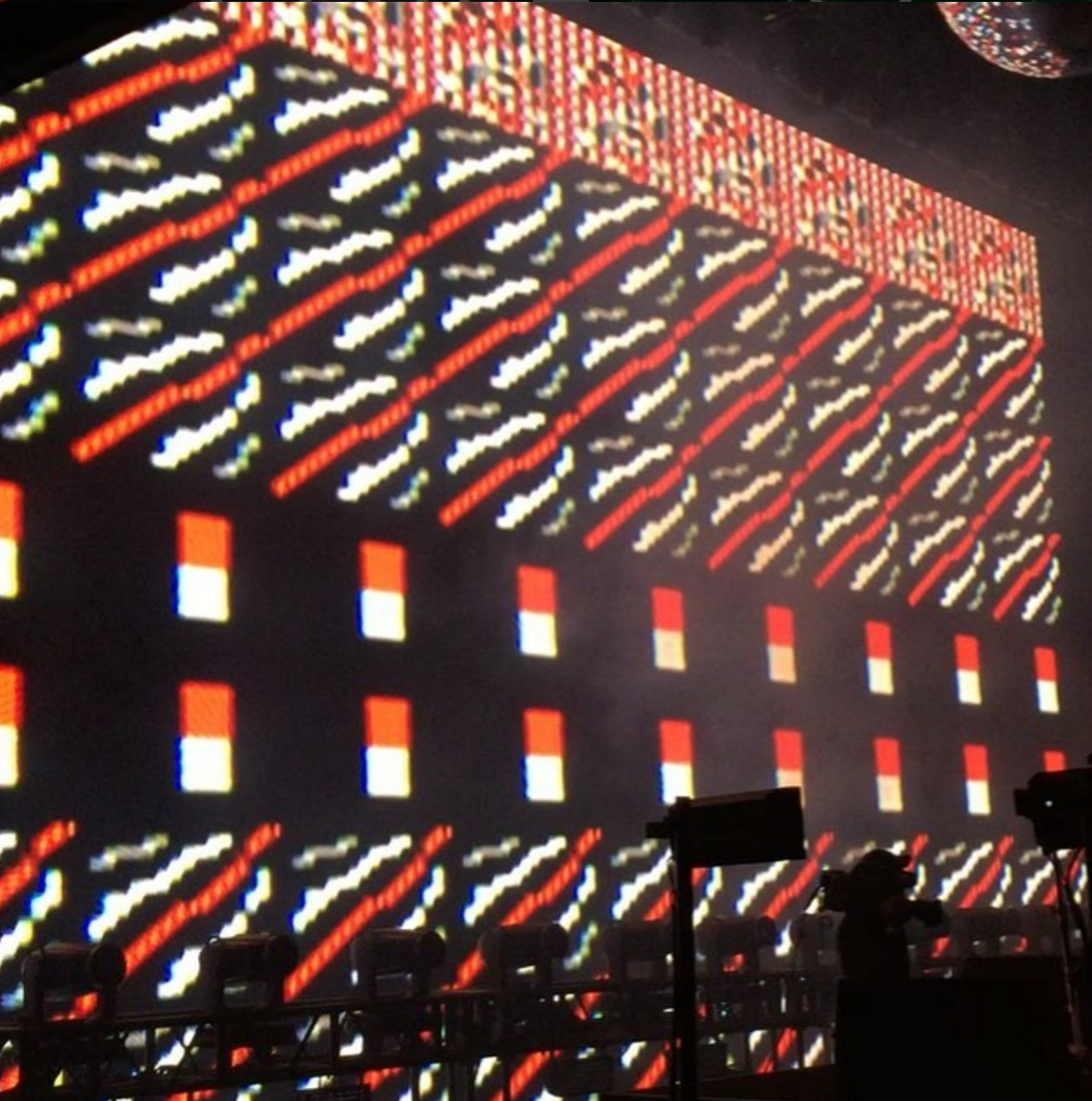

Rehearsal photo by Stefan Goodchild.

From Nev:

Show designer Rob Sinclair was asked by the band to create a system of live triggers - they do not use any form of timecode or playback during the show. They brought on Nev Bull to come up with a control package that could take any of the 64 channels of audio and 12 channels of drum triggers into VDMX. This audio was processed via VDMX's Audio Analysis plugin, and the data was then treated using LFOs and step sequencers to give the required outputs.
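For readers who have not worked with audio triggers before, the general idea of turning an audio level into a cue can be sketched in a few lines of Python. This is not VDMX code and not how the show's rig was built; it is a minimal illustration that assumes audio arrives as frames of samples between -1 and 1. The function names, thresholds and decay factor are all made up for the example.

```python
import math


def rms(frame):
    """Root-mean-square level of one frame of samples in the range -1..1."""
    return math.sqrt(sum(s * s for s in frame) / len(frame))


def detect_triggers(frames, on_level=0.3, off_level=0.15, release=0.5):
    """Return the indices of the frames where a new trigger fires.

    on_level, off_level and release are illustrative values, not settings
    taken from the show.
    """
    envelope = 0.0
    armed = True
    hits = []
    for i, frame in enumerate(frames):
        level = rms(frame)
        # Envelope follower: jump up instantly, decay gradually.
        envelope = max(level, envelope * release)
        if armed and envelope >= on_level:
            hits.append(i)   # fire once when the level rises past on_level
            armed = False
        elif not armed and envelope <= off_level:
            armed = True     # re-arm only after the level has fallen away
    return hits
```

Using two thresholds (hysteresis) keeps a single sustained hit from firing the same cue repeatedly, which matters when the output is something like a strobe.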

The Audio Analysis function of VDMX is being used to run many of the lighting cues on LCD's 2016 tour - taking in up to 20 audio streams at one time and outputting Artnet and MIDI to the MA2 lighting console running the show. This allows the band to trigger strobes, lighting chases and other effects directly from their instruments and voices.

The VDMX server also sent MIDI and OSC commands to control the routing of the audio back out to a bank of video synthesisers, used to create the real time visuals. VDMX was also used to create MIDI control sequences to trigger the Ming Micro video synthesisers - creating step sequences controlled by audio inputs.
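As a rough picture of what "step sequences controlled by audio inputs" means, here is a small Python sketch in which each audio trigger advances a sequence by one step and produces a MIDI note-on message. The class name, the note values and the level-to-velocity mapping are assumptions for illustration only; the actual VDMX layout and the MIDI messages the Ming Micros respond to are not described in the report.

```python
def note_on(channel, note, velocity):
    """Build a raw 3-byte MIDI note-on message.

    channel is 0-15; note and velocity are 0-127.
    """
    if not (0 <= channel <= 15 and 0 <= note <= 127 and 0 <= velocity <= 127):
        raise ValueError("MIDI value out of range")
    return bytes([0x90 | channel, note, velocity])


class AudioSteppedSequencer:
    """Hypothetical sequencer that moves one step per audio trigger."""

    def __init__(self, steps, channel=0):
        self.steps = list(steps)
        self.channel = channel
        self.position = 0

    def on_trigger(self, level):
        """Return the MIDI message for the current step, then advance.

        level is the audio level (0..1) and is mapped to velocity.
        """
        note = self.steps[self.position]
        self.position = (self.position + 1) % len(self.steps)
        # Clamp to 1..127: a note-on with velocity 0 is read as a note-off.
        velocity = max(1, min(127, int(level * 127)))
        return note_on(self.channel, note, velocity)
```

Because the sequence only moves when the audio says so, the pattern stays locked to the performer rather than to a clock, which fits a show that runs without timecode.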

The whole show is set to run autonomously - with MIDI triggers coming from the lighting desk operator to set up the VDMX server's presets. A MADI interface was used to bring the audio channels in, and a Roland 16 input interface dealt with the drum triggers.

And some screen capture videos of all of that audio analysis in action? Yes!

Next you can go over to Stefan's blog to learn more about the analog synthesizer and oscilloscope systems that were used during the live show:

No custom software, no renders, just real hardware patched and played just how the band make the music on stage. I used a modular video synth based round the LZX Industries Visual Cortex unit to create audio reactive analog video designs. The rack is pictured below, and 'patches' had to be saved by drawing on a printout of the unit fascias to show what connects to what, and what settings to use. Also in the rack was a 3trinsrgb+1c analog video synth which I used in several songs.

Also used were two prototype Ming Micros, which are 8 bit digital video synthesisers that create imagery and animations reminiscent of the original Atari 2600 games console, with similar limitations. These I controlled via MIDI using a custom VDMX layout to sequence and control the parameters. Content was designed using a pixel art package and a supplied Processing sketch that converts the PNG images into compatible text files which are read by the Ming.

To get a sense of what it all looked like on the other side, head over to YouTube for some fan recorded videos from the crowd like these...