We’ve been emailing with Mojo Video Tech for about 15 years now, and his career goes back well before that… a full, in-depth interview is long overdue, but today we’ll have to settle for a quick behind-the-scenes look at his latest project, Meta-7, which is playing through May 18th, 2019, and you can still get tickets.

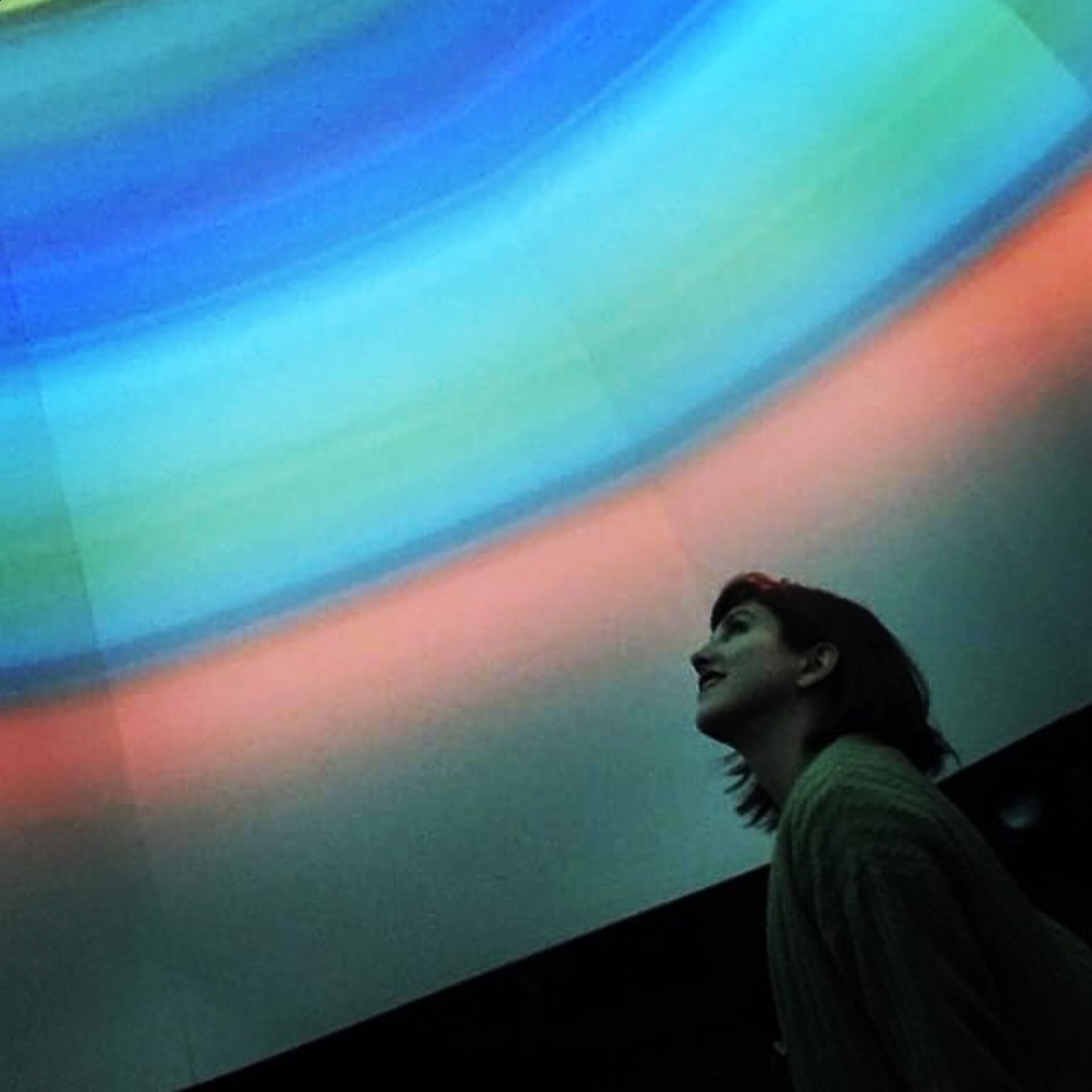

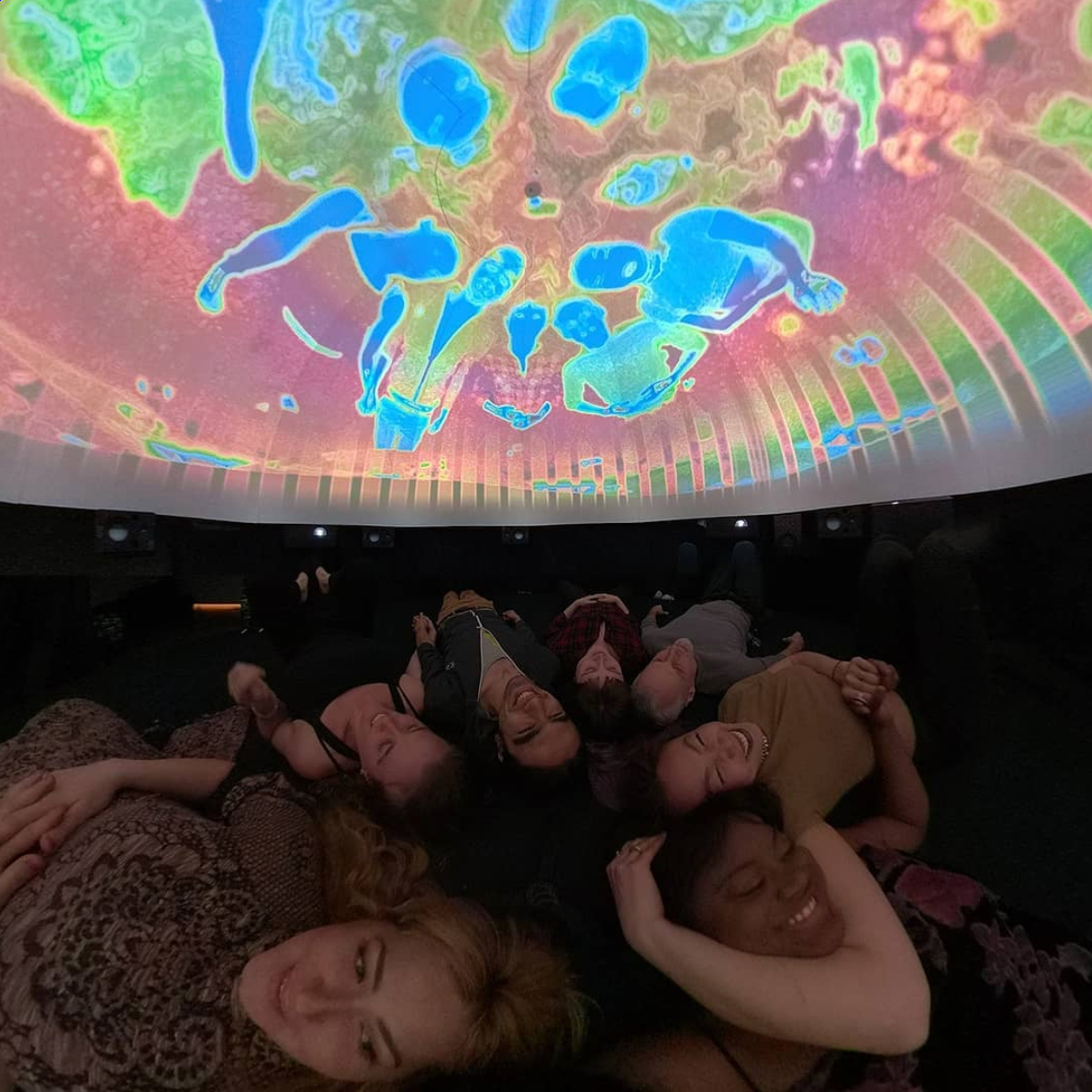

International Sound Artist John Sully teams up with XIX Collective and Mojo Video Tech to present META-7 (an alchemic opera). Audience members lie on a sub-bass vibrating floor surrounded by a 7.1 sound system inside a 360° video-mapped dome. The story is about all of us. Where do we come from? Why are we here? Where do we go after? Very much inspired by Sully’s album listening parties when he was a kid in the 70s, META-7 is a gesture towards the sacred and mysterious through a deep immersion of sound, light and architectural design. There will be 4 shows per night at 7, 8, 9 and 10pm. Please join us at 10-16 Studios, 10-16 46 Ave Long Island City NY.

Mojo Video Tech has also provided us with a description of how the setup works… if you plan to go, maybe wait until afterwards to read the rest of this to avoid any sensory spoilers.

The project began with the completed 8-channel soundtrack. A reference movie was created in After Effects using the audio waveforms as a guide to mark transitions, with the section labels, timecode and frame numbers rendered as text. This ref movie was used to quickly create a cue list with frame-accurate timecode markers.
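To illustrate how frame numbers burned into a ref movie relate to the timecode markers in a cue list, here is a minimal Python sketch of the conversion. The 30 fps rate, the non-drop-frame format, and the cue labels and frame values are all assumptions for illustration; the write-up does not state the actual frame rate or cue points.

```python
def frames_to_timecode(frame: int, fps: int = 30) -> str:
    """Convert a zero-based frame count to non-drop-frame HH:MM:SS:FF.

    fps=30 is an assumed rate, not one taken from the show files.
    """
    if frame < 0:
        raise ValueError("frame must be non-negative")
    ff = frame % fps
    total_seconds = frame // fps
    ss = total_seconds % 60
    mm = (total_seconds // 60) % 60
    hh = total_seconds // 3600
    return f"{hh:02d}:{mm:02d}:{ss:02d}:{ff:02d}"


def timecode_to_frames(timecode: str, fps: int = 30) -> int:
    """Inverse of frames_to_timecode for the same non-drop-frame format."""
    hh, mm, ss, ff = (int(part) for part in timecode.split(":"))
    return ((hh * 60 + mm) * 60 + ss) * fps + ff


# Hypothetical cue points read off a ref movie (label, frame number).
cues = [("Opening", 0), ("Red", 4500), ("Orange", 9000)]

for label, frame in cues:
    print(f"{frames_to_timecode(frame)}  {label}")
```

Keeping the cue list in frames and converting to timecode only for display avoids rounding drift when markers are edited.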

The soundtrack was mastered as 4 stereo tracks carrying 8 discrete channels of digital audio. We use QLab for audio playback, timecode generation and show control. A Focusrite Scarlett 18i20 interface distributes the individual outputs to the dome's 7.1 speakers and the haptic sub-bass floor, and also provides a mixdown to a line input that drives the audio reactivity. During the development phase and for the preview shows, the QLab project ran on a MacBook Pro and the visuals were mixed live in VDMX on a Mac Pro with an NVIDIA GTX 960 providing one HD output for a control monitor and two 4K outputs: one to an Atomos Shogun Inferno for recording and the other to a Datapath Fx4 feeding four Optoma short-throw projectors. The canvas was set to a 2K domemaster format (2048x2048), with VDMX outputting in fullscreen (copy) mode for the recorder and via Syphon to BlendyDome for mapping. Sync was done with MTC (MIDI Time Code) sent from QLab over a network session to the VDMX Timecode plugin.
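For readers unfamiliar with the domemaster format, the following Python sketch shows one common way a square fisheye frame maps to directions on a dome. It assumes an equidistant (angular) fisheye, where the image centre is the zenith and the edge of the inscribed circle is the horizon; the azimuth orientation is also an assumption. This is not BlendyDome's actual mapping code, only a rough illustration of the geometry.

```python
import math


def domemaster_to_direction(x: float, y: float, size: int = 2048):
    """Map a point in a square domemaster frame to (azimuth, elevation) in degrees.

    Assumes an equidistant fisheye: distance from the centre is proportional
    to the angle away from the zenith. Returns None for points outside the
    inscribed circle, which carry no dome content.
    """
    half = size / 2.0
    dx = (x - half) / half  # -1..1 across the frame
    dy = (y - half) / half
    r = math.hypot(dx, dy)
    if r > 1.0:
        return None
    azimuth = math.degrees(math.atan2(dy, dx)) % 360.0  # orientation is assumed
    elevation = 90.0 * (1.0 - r)  # 90 at the zenith, 0 at the horizon
    return azimuth, elevation


print(domemaster_to_direction(1024, 1024))  # centre of the frame: the zenith
print(domemaster_to_direction(0, 0))        # corner: outside the dome circle
```

Only the inscribed circle of the 2048x2048 canvas ends up on the dome, so content placed in the corners is never projected.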

Ref movie with timecode and cue list

VDMX live mix / dev project, TouchOSC surface

The narrative structure of the show is a guided meditation, so we have a solid color background layer which progresses through the color spectrum. Each color is accompanied by the corresponding binaural frequencies in the soundtrack. These pulsating tones drive the parameters on four layers of a polar gradient shader, using 10-band audio analysis paired with a multi-slider control surface plugin for easy ctrl-drag data source assignment. This mix was recorded as the base layer that the rest of the visuals were then built on top of. A TouchOSC surface on an iPad Pro was used for live mixing.
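As a rough illustration of the idea of audio bands driving a polar gradient, here is a small Python stand-in. The real layers are ISF shaders running in VDMX, and their code is not reproduced here; the function, its parameters (`rings`, `spokes`), the band levels and the band-to-layer assignment below are all hypothetical.

```python
import math


def polar_gradient(u: float, v: float, level: float,
                   rings: float = 4.0, spokes: float = 6.0) -> float:
    """Brightness in [0, 1] at point (u, v), with (0, 0) at the centre.

    level is a hypothetical audio band level in [0, 1]; raising it shifts
    the phase of the concentric rings, so the pattern pulses with the sound.
    """
    radius = math.hypot(u, v)
    angle = math.atan2(v, u)
    radial = 0.5 + 0.5 * math.cos(2.0 * math.pi * (radius * rings - level))
    angular = 0.5 + 0.5 * math.cos(angle * spokes)
    return radial * angular


# Hypothetical levels from a 10-band analyser, lowest band first.
bands = [0.9, 0.7, 0.6, 0.4, 0.5, 0.3, 0.2, 0.2, 0.1, 0.05]

# Hypothetical assignment of one band to each of the four layers.
layer_bands = [0, 3, 6, 9]

for layer, band in enumerate(layer_bands, start=1):
    value = polar_gradient(0.25, 0.0, bands[band])
    print(f"layer {layer} (band {band}): {value:.3f}")
```

In a shader the same function would run once per pixel; evaluating it at a single point here just shows how a band level changes the result.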

Audio interface control, QLab, Blendydome

VDMX playback / show project, QLab, Blendydome

Polar gradient ISF code and data source mapping to audio analysis

All the video content is code (with the exception of a live camera feed in the opening scene), so the original idea was to create an entirely generative piece. The show would consist of audio playback, timecode-triggered mix automation and real-time audio-reactive shaders. The build process began with the standard method for developing visuals for a soundtrack, which is to improvise, repeat and refine the visuals, create a new preset for each transition and save successive versions of the project as it evolves. The intention was to then rebuild all the live mixing elements using VDMX’s wide array of automation plugins, including the Cue List, LFOs, Clocks + Number FX, Data Looper, etc. However, we soon realized that the density, complexity and sheer number of interactions involved in a 43-minute live VJ mix was so great that emulating the performance with programming was not feasible.
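The basic mechanism behind timecode-triggered automation, as originally planned, can be sketched in a few lines of Python. This is not how VDMX's Cue List plugin is implemented; the cue names and frame numbers are hypothetical, and the sketch only covers preset changes, not the continuous fader moves that made the full mix impractical to automate.

```python
from bisect import bisect_right

# Hypothetical cue list: (start frame, preset name), sorted by start frame.
CUES = [(0, "opening"), (1800, "red"), (5400, "orange"), (9000, "yellow")]
CUE_FRAMES = [frame for frame, _ in CUES]


def active_cue(frame: int):
    """Return the preset that should be live at the given frame, if any."""
    index = bisect_right(CUE_FRAMES, frame) - 1
    return CUES[index][1] if index >= 0 else None


def fired_between(prev_frame: int, frame: int):
    """Return presets whose start frame falls in (prev_frame, frame].

    Checking a range rather than a single frame means a cue still fires
    if the incoming timecode skips a few frames.
    """
    lo = bisect_right(CUE_FRAMES, prev_frame)
    hi = bisect_right(CUE_FRAMES, frame)
    return [name for _, name in CUES[lo:hi]]


print(active_cue(2000))
print(fired_between(1795, 1805))
```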

The best of the live takes were assembled in After Effects and rendered out in sections for playback. The final show is a fully automated "go-button" system with all software running on a single media server.

And if you’d like to see a bit more of it in action, here are some photos from the events and rehearsals…

Can’t wait to see more from this collaboration of artists! For the latest and more, make sure to follow Mojo Video Tech on Instagram and check out his shader collection on the ISF sharing site. And don’t forget you can still get tickets to Meta-7!